
| Citation: | HUANG Zhongling, YAO Xiwen, and HAN Junwei. Progress and perspective on physically explainable deep learning for synthetic aperture radar image interpretation[J]. Journal of Radars, 2022, 11(1): 107–125. doi: 10.12000/JR21165 |

Progress and Perspective on Physically Explainable Deep Learning for Synthetic Aperture Radar Image Interpretation(in English)

DOI: 10.12000/JR21165 CSTR: 32380.14.JR21165


Abstract

Deep learning technologies have been developed rapidly in Synthetic Aperture Radar (SAR) image interpretation. The current data-driven methods neglect the latent physical characteristics of SAR; thus, the predictions are highly dependent on training data and even violate physical laws. Deep integration of the theory-driven and data-driven approaches for SAR image interpretation is of vital importance. Additionally, the data-driven methods specialize in automatically discovering patterns from a large amount of data that serve as effective complements for physical processes, whereas the integrated interpretable physical models improve the explainability of deep learning algorithms and address the data-hungry problem. This study aimed to develop physically explainable deep learning for SAR image interpretation in signals, scattering mechanisms, semantics, and applications. Strategies for blending the theory-driven and data-driven methods in SAR interpretation are proposed based on physics machine learning to develop novel learnable and explainable paradigms for SAR image interpretation. Further, recent studies on hybrid methods are reviewed, including SAR signal processing, physical characteristics, and semantic image interpretation. Challenges and future perspectives are also discussed on the basis of the research status and related studies in other fields, which can serve as inspiration.


1. Introduction

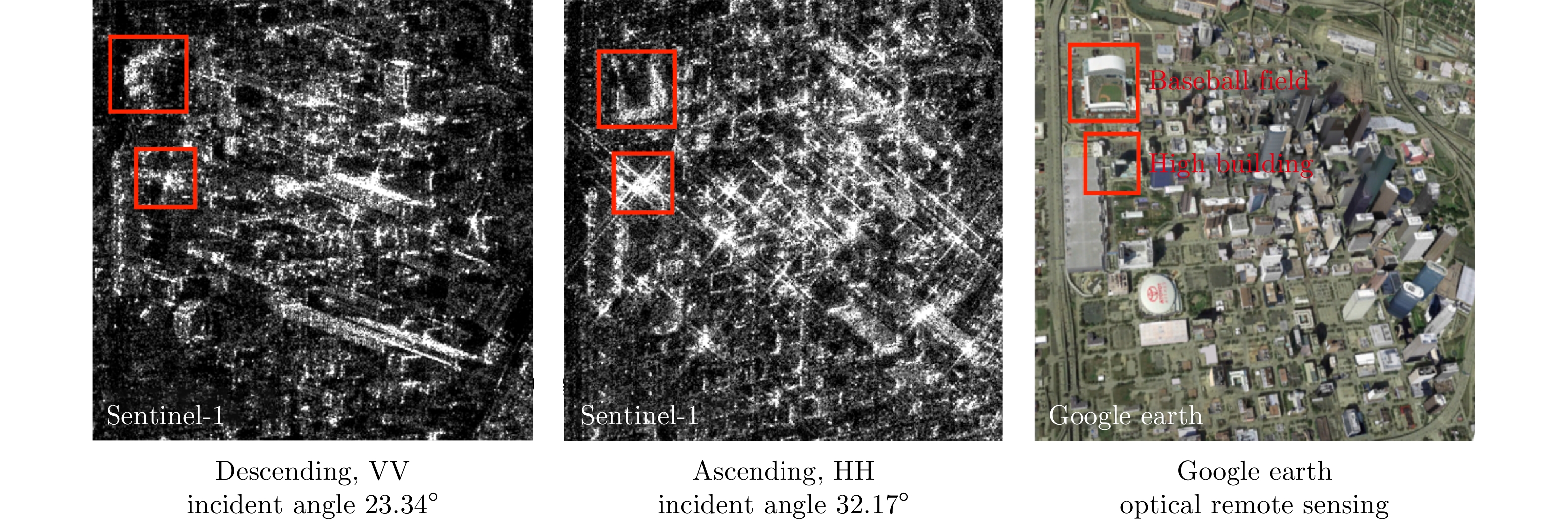

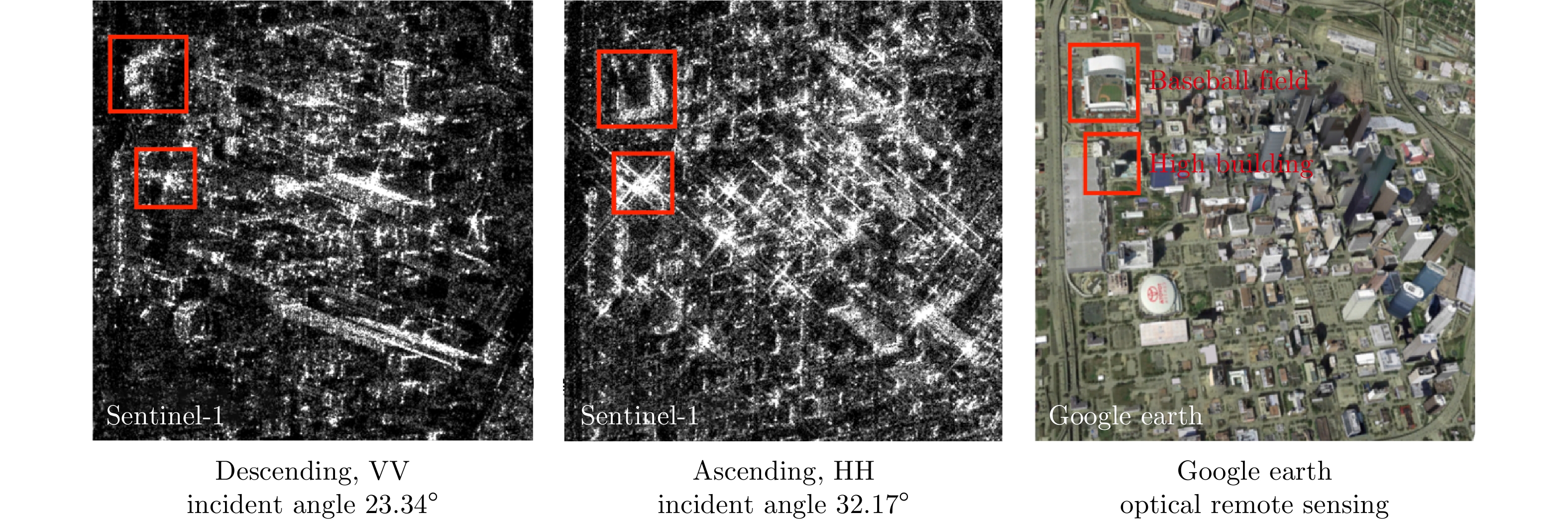

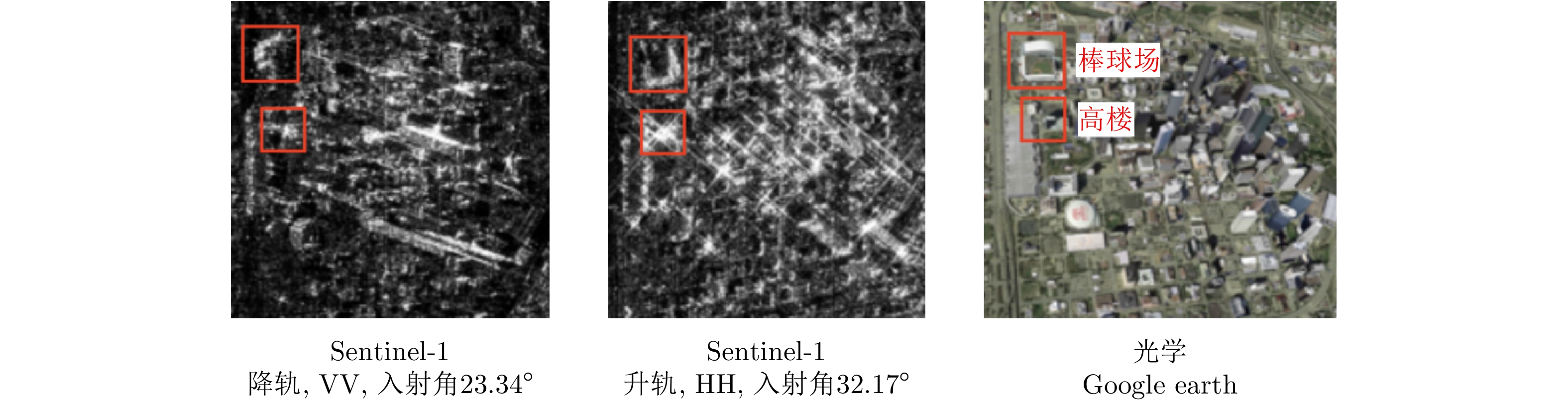

As an active microwave sensor, Synthetic Aperture Radar (SAR) can image day and night in all weather conditions, unaffected by illumination and climate, and therefore has important application value in military and civilian Earth observation. Unlike optical remote sensing techniques such as visible-light and infrared imaging, SAR actively emits electromagnetic waves to illuminate ground objects, receives the backscattered waves as echo signals, and generates two-dimensional SAR images through imaging algorithms[1]. SAR images therefore reflect the microwave characteristics of ground objects: the imaging results are influenced by factors such as wavelength, incidence angle, and polarization mode, and are closely related to the structure, arrangement, and material characteristics of the target. As shown in Fig. 1[2], they differ markedly from optical images, with which the human visual system is more familiar.

SAR image interpretation faces many challenges. To identify and interpret ground objects in SAR images accurately, experts usually need background knowledge of SAR imaging mechanisms, microwave scattering characteristics, and related topics[3]. Traditional SAR image interpretation methods are mostly designed on the basis of rich expert knowledge and theoretical models[4-6], and they are highly interpretable. However, hand-crafted features tend to capture only certain aspects of SAR image characteristics, their design requires domain knowledge, and the design process is time-consuming. With the rapid development of artificial intelligence, Deep Learning (DL)-based methods have gradually become mainstream in this field in recent years. DL builds end-to-end systems that extract multi-level features and learn the target task automatically and jointly, overcoming the limits of manually designed features and classifiers and achieving significant performance improvements[7].

Currently, most mainstream DL methods for SAR image interpretation are developed from the field of computer vision and are primarily focused on the visual information of SAR amplitude images. A Convolutional Neural Network (CNN) is used to perform automatic feature learning of SAR amplitude images, and the loss function is optimized for specific tasks[7-10]. The advantage of the data-driven approach is that it can automatically learn potential patterns and rules from massive amounts of data. However, SAR image interpretation typically encounters the following challenges in its practical application:

(1) Learnable but difficult to explain: Unquestionably, Deep Neural Networks (DNNs) are capable of learning, but the model is complex and difficult to explain. The model predictions must be highly reliable and credible in specific SAR application fields, such as battlefield monitoring. Given the “black box” nature of the current DL model, decision-makers have difficulty understanding the results, and the technology’s practical application is restricted.

(2) Big data but small annotations: Even though SAR satellites in orbit can provide vast amounts of data, large-scale SAR data labeling is expensive because of the difficulties of visual interpretation. Training DNNs with a small amount of limited labeled data is a major problem that needs to be solved urgently. Even when many high-quality annotated samples are available, the trained model is prone to poor generalization performance on other SAR images with different imaging parameters and other factors[8-10].

(3) Limitation of visual interpretation: The texture information of SAR images is an important basis for SAR interpretation. However, because of the unique microwave imaging mechanism, some targets with complicated scattering are difficult to discriminate visually. DL based solely on amplitude information cannot fully understand SAR images[11].

We believe that developing physically explainable DL methods, distinct from those of computer vision, plays an important role in resolving the aforementioned issues and is of great significance for mining the physical intelligence of microwave vision[12]. The Physically Explainable DL (PXDL) proposed in this paper aims to establish a hybrid modeling paradigm that combines the physical models or interpretation expertise of SAR with DL to improve the explainability of the model itself. The data-driven strategy makes efficient use of data, whereas the theoretical model is highly interpretable. Through hybrid modeling, the two can complement each other, yielding more transparent algorithms, better interpretability, and less dependence on labeled samples. This also promotes the development of the third generation of artificial intelligence[13]. Explainable Artificial Intelligence (XAI) is one of the leading research directions in AI, and much work has been conducted on explainable DL (XDL)[14-18]. One branch of XDL is post-hoc explanation, which applies interpretability analysis methods to describe a model after it has been constructed; related work in the SAR field can be found in Refs. [19-21]. The proposed PXDL falls more into the category of self-explanatory models in XDL and can be regarded as an application of these ideas to SAR image interpretation. It constructs interpretable AI systems for SAR by means of approaches such as physics-based machine learning.

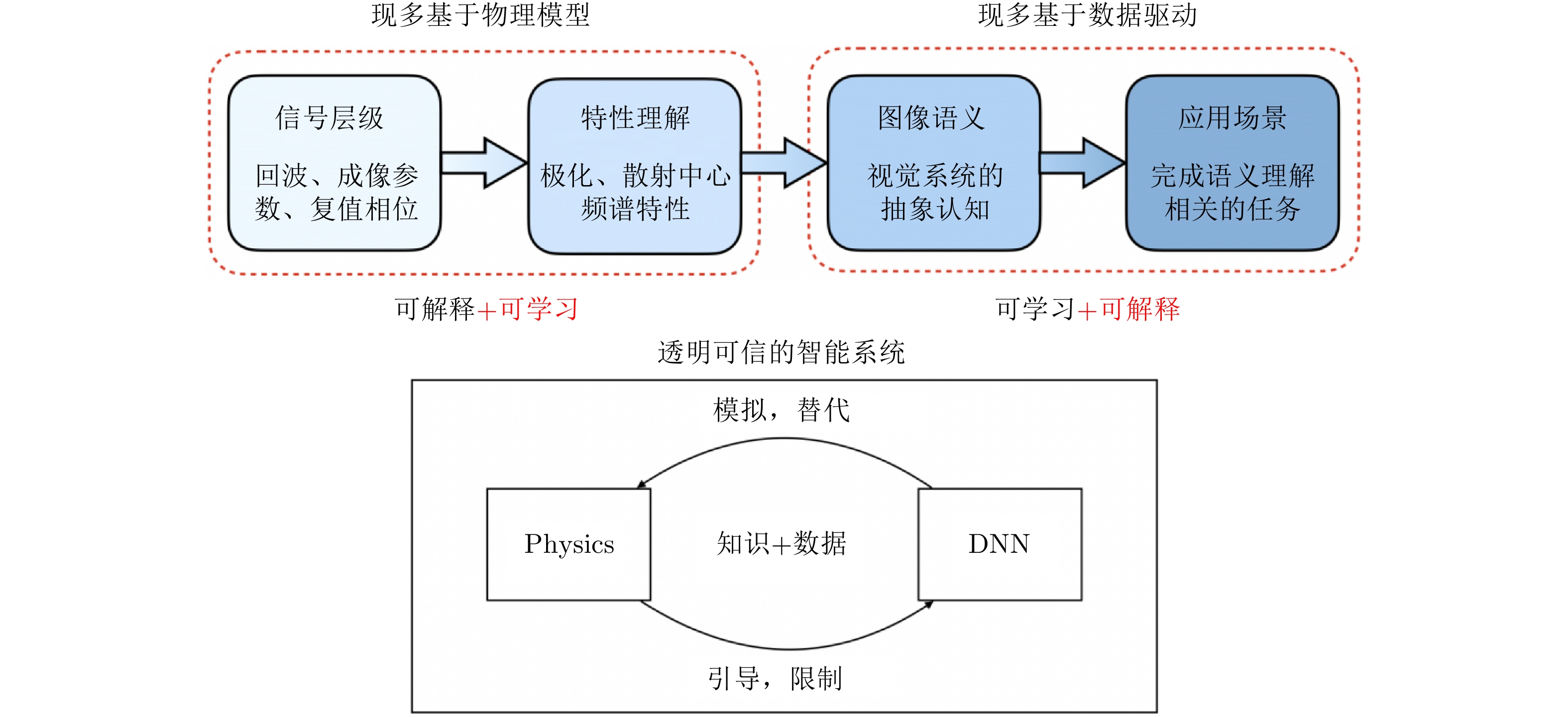

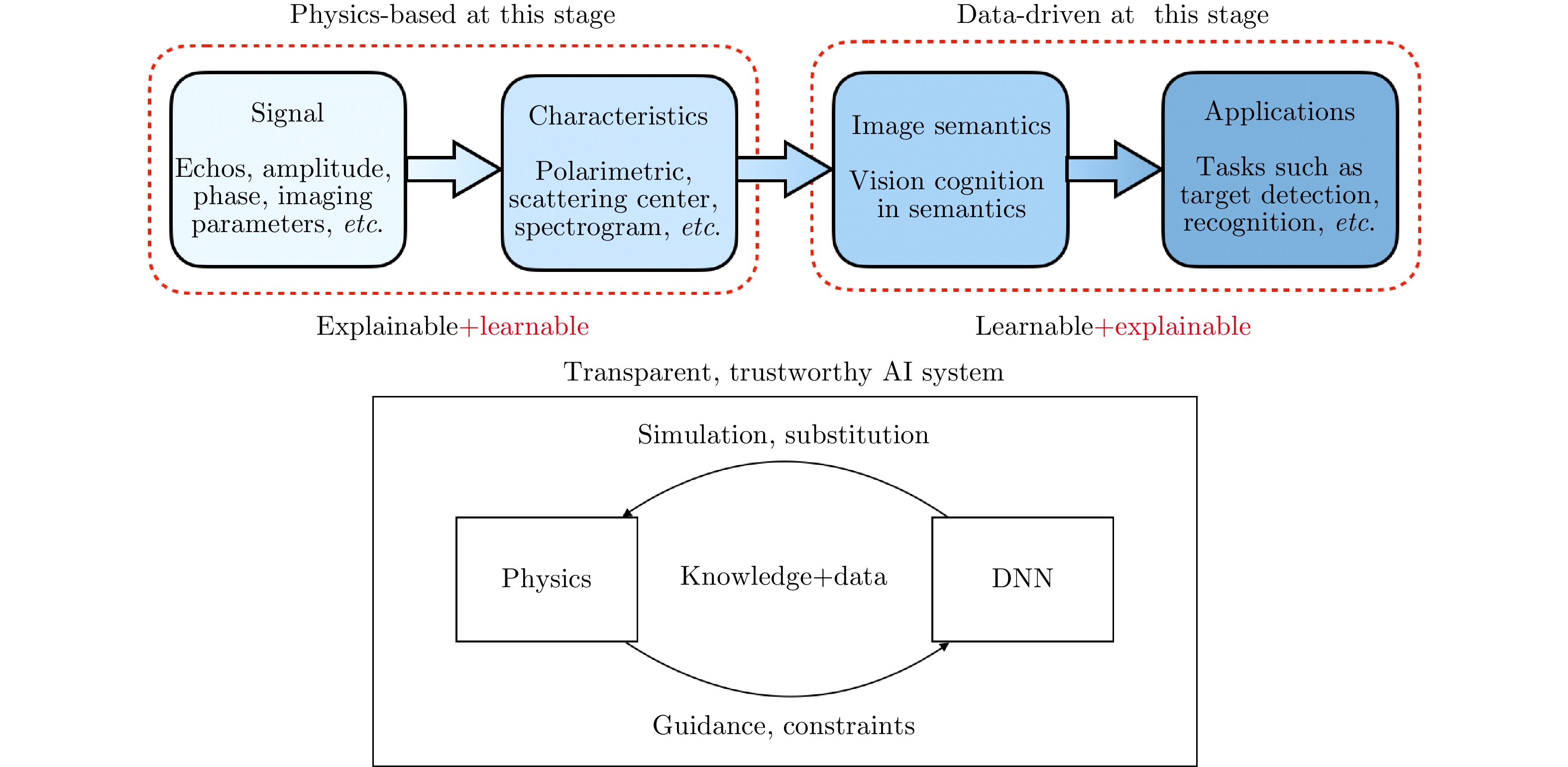

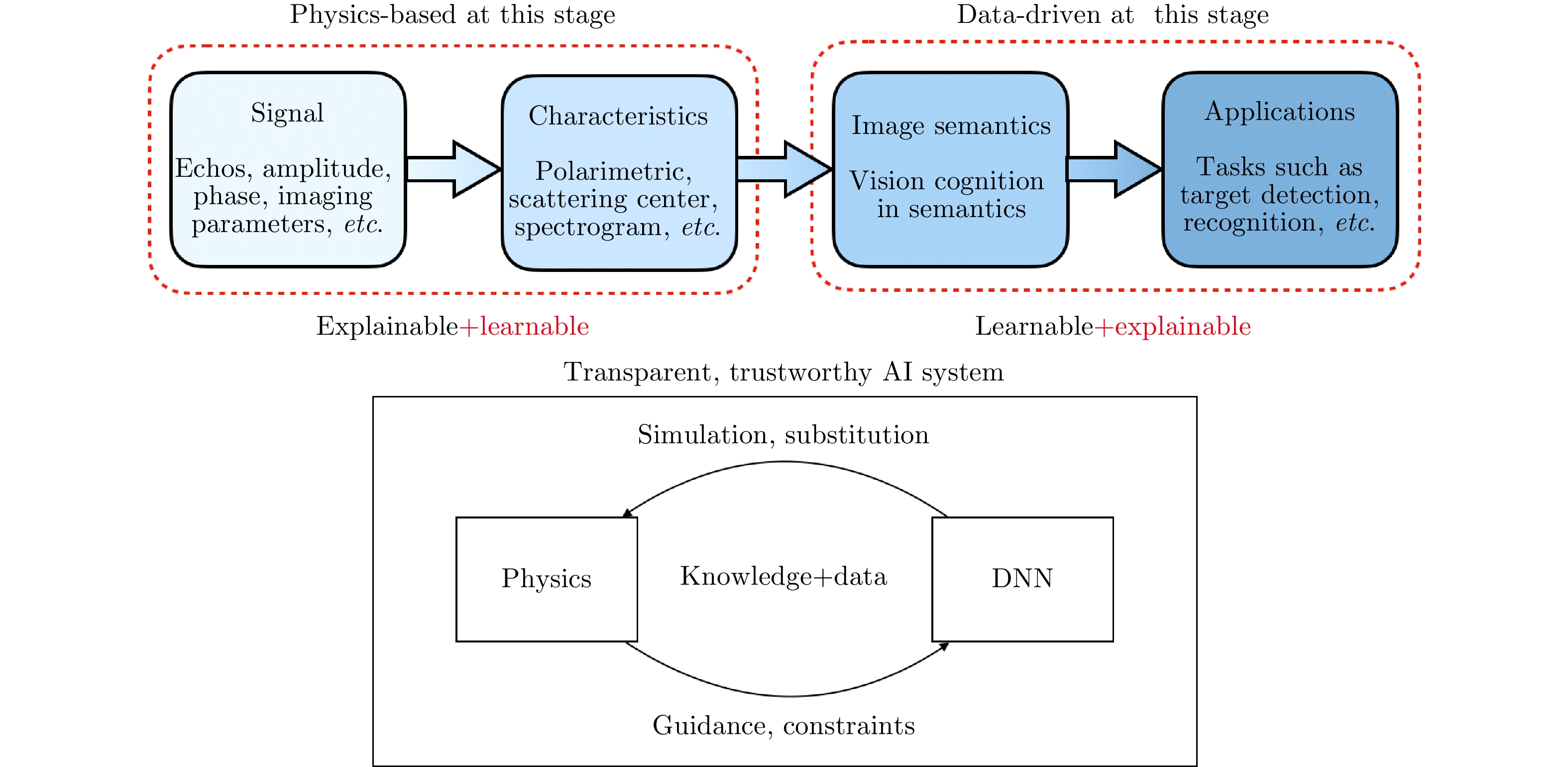

In contrast to traditional DL concepts from computer vision, which emphasize image interpretation, PXDL methods should construct a new learnable and interpretable paradigm across multiple dimensions. Inspired by the cognitive process of experts, we propose to interpret SAR images from signals and characteristics to image semantics and applications, as shown in Fig. 2. Besides extracting SAR image features automatically with DL to achieve end-to-end prediction, other important tasks are to learn the SAR imaging mechanism, clarify the impact of imaging conditions on the results, and understand the physical scattering characteristics of targets. Most of these processes are currently handled by interpretable physical models, but some of these models rely on assumptions and approximations, making it difficult to describe complicated situations accurately[4]. If the physical model is insufficient or difficult to derive, data-driven methods can be used to simulate or replace it, as well as to estimate physical parameters automatically. Existing knowledge can also serve as a constraint during optimization to prevent the model from learning results that contradict the physical model. Conversely, SAR physical models or expert knowledge can improve traditional data-driven algorithms: they can be used to guide the DNN in autonomous learning, making full use of large numbers of unlabeled samples, to obtain a model with stronger generalization and physical perception ability and to ensure the physical consistency of interpretation results.

In this paper, the new research direction of physics-based machine learning is briefly introduced in Section 2. Section 3 then summarizes the basic ideas for developing physically explainable DL. Sections 4 and 5 review advances in physics-data hybrid modeling for different SAR applications over the last two to three years. Finally, Sections 6 and 7 present the outlook for PXDL technology for SAR and the conclusions, respectively.

2. Physics-based Machine Learning

Physics-based Machine Learning (Physics-ML) is a recently proposed type of machine learning that embeds physical knowledge into machine learning models (primarily DNNs) to solve ill-conditioned or inverse problems, thereby improving model performance, accelerating the solution, and enhancing generalizability. Its applications have yielded excellent results in numerous domains, including fluid mechanics and aerodynamics[22]. Physics-informed machine learning is widely used for physical processes governed by nonlinear Partial Differential Equations (PDEs). Thuerey et al.[23] introduced this topic in depth; the primary technique is to express the PDE-based physical process within a neural network and use automatic differentiation to optimize the network so that it satisfies the embedded physics. The Physics-Informed Neural Network (PINN) framework proposed by Raissi et al.[24] has become one of the most prevalent techniques in this area, followed by several improvements and related applications[25,26].
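The core idea behind a physics-informed loss (a data-misfit term plus the residual of the governing equation evaluated at collocation points) can be illustrated with a deliberately small sketch. Here a polynomial surrogate stands in for the DNN, and the assumed governing equation is the first-order decay law $du/dt = -ku$. Because the derivative of a polynomial is available in closed form, both loss terms are linear in the coefficients, so a single least-squares solve replaces gradient-based training with automatic differentiation. The equation, the decay rate `k`, the polynomial degree, and the weight `lam` are illustrative assumptions, not choices taken from Refs. [23-26].

```python
import numpy as np


def fit_physics_informed(t_obs, u_obs, k, t_colloc, degree=6, lam=1.0):
    """Fit u(t) = sum_j c_j * t**j by minimizing
        ||u(t_obs) - u_obs||^2 + lam * ||du/dt + k*u||^2  (at t_colloc).
    Both terms are linear in c, so ordinary least squares is enough."""
    powers = np.arange(degree + 1)
    # Data term: polynomial basis evaluated at the observation times.
    A_data = t_obs[:, None] ** powers
    # Physics term: residual du/dt + k*u of the assumed ODE at collocation points.
    V = t_colloc[:, None] ** powers
    dV = np.zeros_like(V)
    dV[:, 1:] = powers[1:] * t_colloc[:, None] ** (powers[1:] - 1)
    A_phys = dV + k * V
    # Stack both terms; sqrt(lam) weights the physics residual in the squared loss.
    A = np.vstack([A_data, np.sqrt(lam) * A_phys])
    b = np.concatenate([u_obs, np.zeros(len(t_colloc))])
    coeffs, *_ = np.linalg.lstsq(A, b, rcond=None)
    return coeffs


# Usage: three noisy samples on [0, 1], physics enforced on [0, 3].
rng = np.random.default_rng(0)
k = 0.8
t_obs = np.array([0.0, 0.5, 1.0])
u_obs = np.exp(-k * t_obs) + 0.01 * rng.standard_normal(3)
coeffs = fit_physics_informed(t_obs, u_obs, k, t_colloc=np.linspace(0.0, 3.0, 50))

t_test = np.linspace(0.0, 3.0, 31)
u_pred = (t_test[:, None] ** np.arange(coeffs.size)) @ coeffs
max_err = np.max(np.abs(u_pred - np.exp(-k * t_test)))
print(f"max error on [0, 3]: {max_err:.4f}")
```

With `lam = 0` the problem has three equations for seven unknowns, and the fit beyond t = 1 is no longer guided by the decay law. The residual rows are what carry the physical prior into the unlabeled region.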

In Earth science and remote sensing, Refs. [27-30] reviewed methodologies and applications that integrate machine learning/DL with physical models. The mainstream ideas include improving the objective function and developing hybrid models, with applications to hydrological models, radiative transfer processes, and climate change. For example, the Physics-Guided Neural Network (PGNN) learning framework proposed in Refs. [31,32] feeds the predictions of a physical model into the neural network together with the observation data and uses the physical model to constrain network training. Similarly, Ref. [33] applied this framework to atmospheric convection prediction. Related work on the topic of this paper includes applications to optical remote sensing imagery and seismic wave interpretation[34,35], as well as to beamforming[36,37]. By contrast, the physical models of SAR are more intricate, and research on physics-based machine learning for SAR is still in its infancy; thus, it was not covered by the reviews in Refs. [27–30]. In addition, a recent review article on DL in SAR[38] lacks an overview and discussion of the state of research in this field.

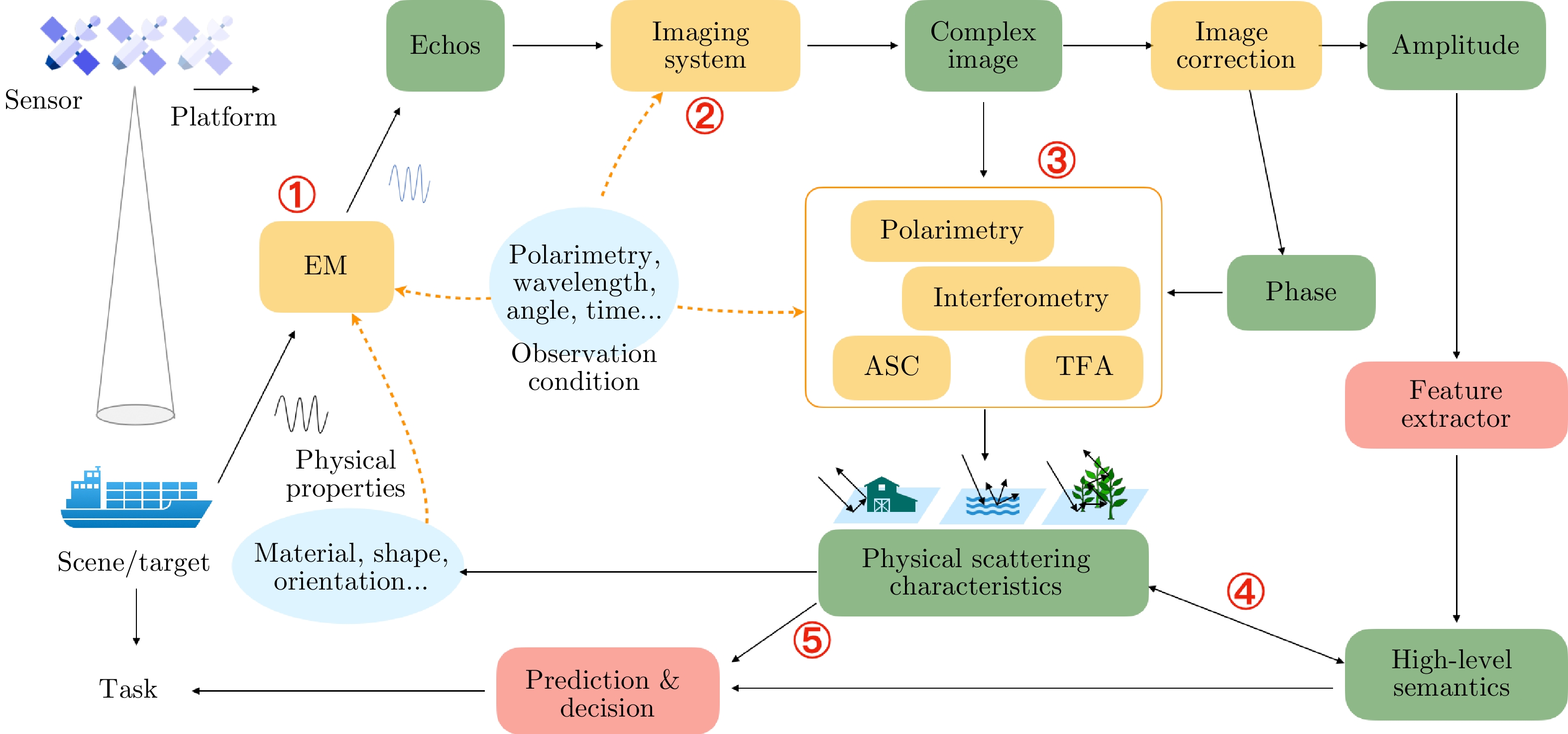

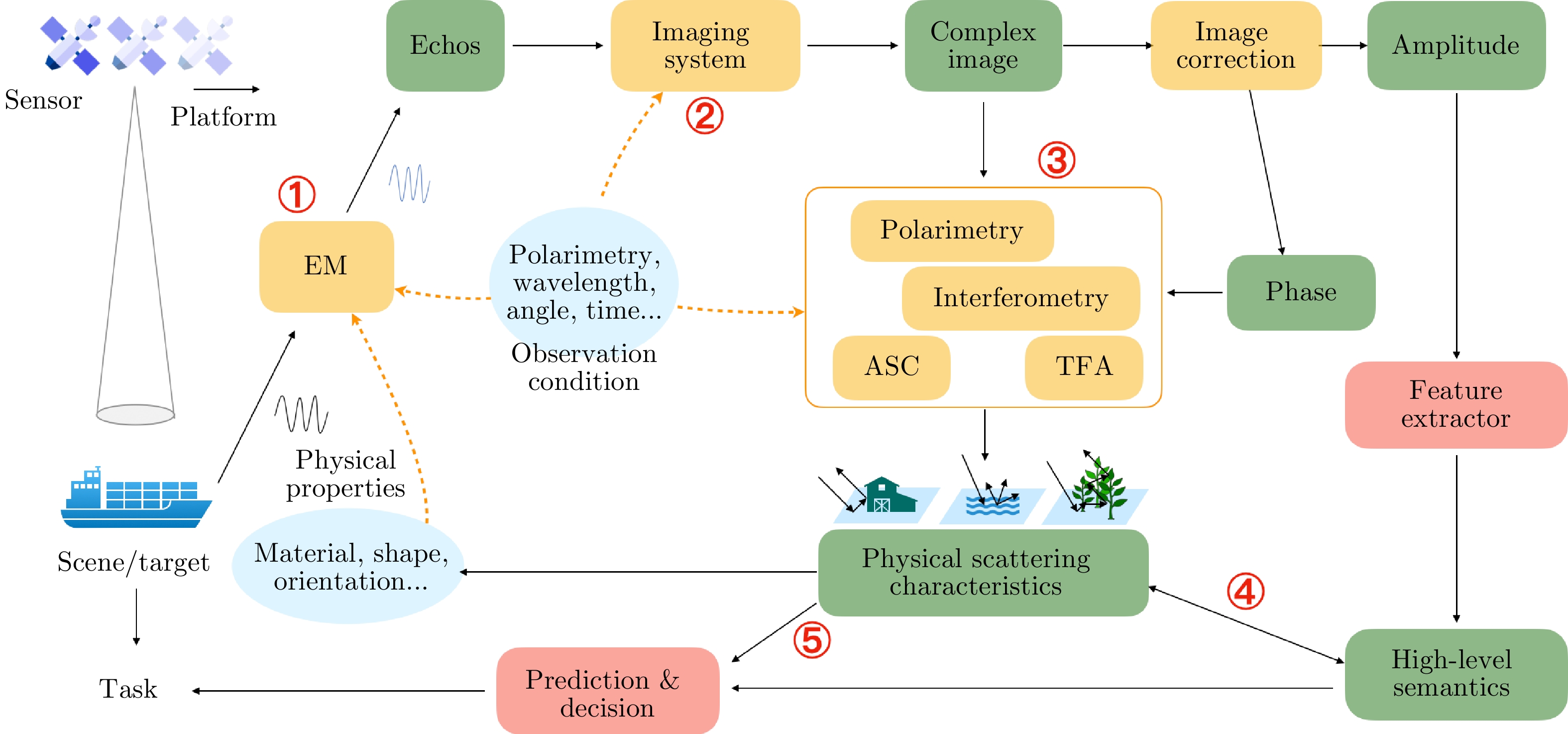

3. Physically Explainable Deep Learning for SAR

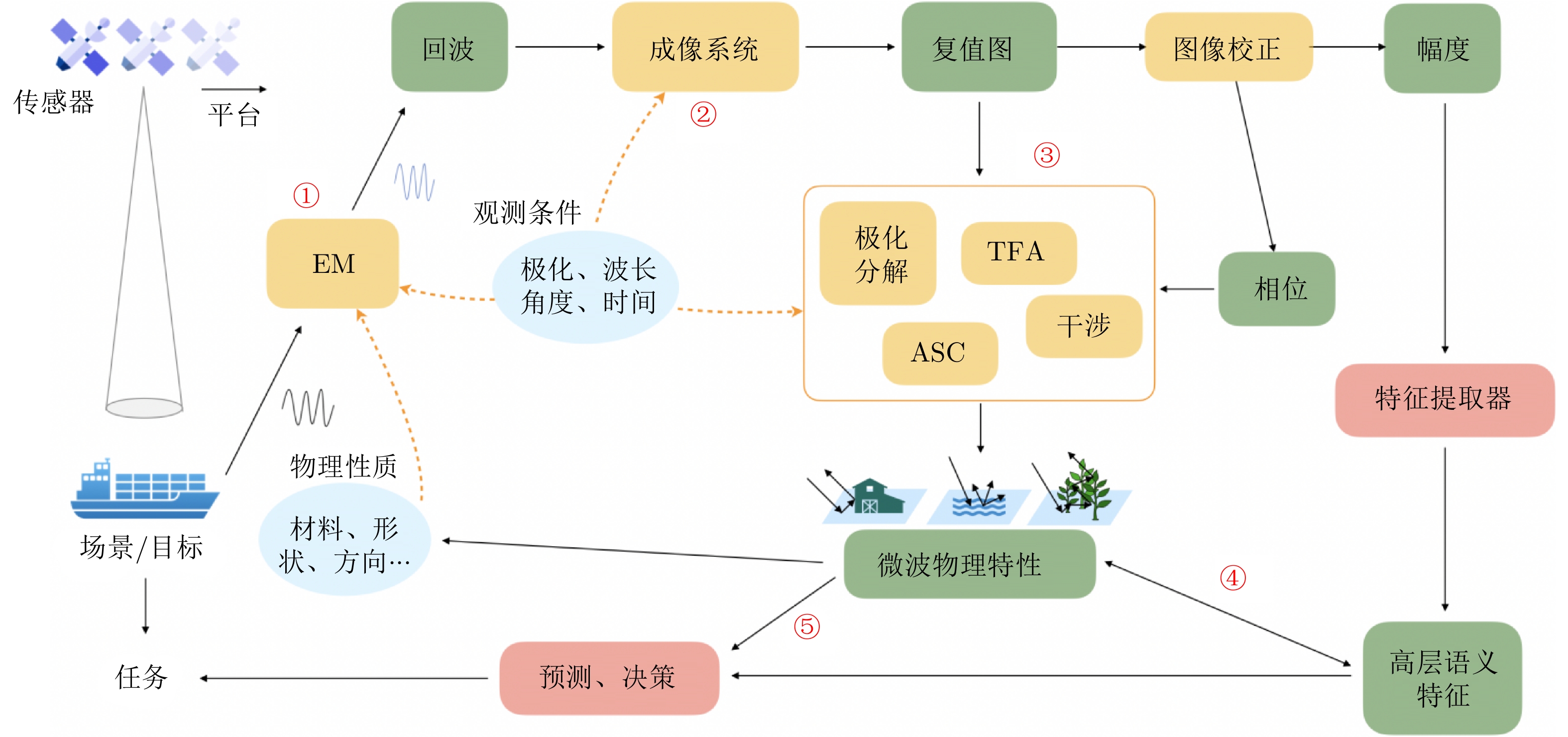

Fig. 3 depicts the SAR image interpretation process indicated in Fig. 2 in detail, where yellow represents the physical models, green represents the input and output of each module, red represents modules that are typically implemented by DNNs, and blue represents the parameter set. The potential PXDL implementation concepts for SAR image interpretation tasks are indicated by numbers in Fig. 3. ①, ②, and ③ represent substituting or simulating a physical process and solving the parameters of the physical model using data-driven methods, as described in Sections 3.1, 3.2, and 3.3.1. ③, ④, and ⑤ illustrate the integration of physical guidance or physical constraints in DNN training or providing prior physical information for data-driven methods, as elaborated in Sections 3.3.2, 3.4, and 3.5. In this section, we briefly outline the basic ideas of how to develop PXDL algorithms for SAR based on Fig. 3. The specific implementation cases are given in Sections 4 and 5.

3.1 Improving the parameterization of the physical model by DNN

A moving platform carries a radar sensor that emits electromagnetic waves; these interact with targets to generate echo signals that are received by the sensor. Two-dimensional complex-valued SAR image data are then obtained through the imaging system's processing. The imaging results are closely related to the physical properties of the scene/target and the working parameters of the sensor and platform. In application scenarios such as target recognition, electromagnetic scattering models are typically used to simulate targets for data augmentation or to aid target recognition[39,40]. Both electromagnetic simulation and parametric modeling of electromagnetic scattering require several crucial parameters to be determined, which is typically difficult. In such cases, DNNs can learn the mapping rules automatically from large volumes of observational data, and the learned mappings can be embedded into the physical model to optimize parameter selection.

3.2 Simulating the nonlinear physical process by DNN

Many complicated nonlinear physical processes are computationally expensive and subject to modeling errors. With advances in Graphics Processing Unit (GPU) hardware acceleration and parallel computing, multi-layer stacked DNNs offer fast forward inference and a strong capacity to fit nonlinear models. Therefore, some nonlinear physical processes in SAR, such as SAR image formation and SAR electromagnetic simulation shown in Fig. 3, can be simulated directly by DNNs, which will also contribute to integrating SAR imaging and interpretation[41]. Notably, theoretical knowledge must be imposed as a constraint during learning to prevent the network from producing outcomes that violate physical laws.

3.3 Mutual substitution of DNN and physical model

3.3.1 Substituting the neural network with a reliable physical model

In the field of image processing, Chan et al.[42] proposed the PCANet architecture for image texture feature extraction and classification, which constructs filters by cascaded principal component analysis in place of learned convolution layers, simplifying DNN parameter learning. This architecture has also been applied to SAR image interpretation[43,44], and we believe it can motivate the development of PXDL approaches for SAR. Physical models with a sufficient theoretical basis, such as the polarization decomposition model for fully polarized SAR images[45,46], the sub-aperture decomposition model based on the Fourier transform[47], and the attribute scattering center model for describing targets[48], can be used to analyze and interpret the physical scattering characteristics of the two-dimensional complex-valued images output by the SAR imaging system. These physical models themselves provide interpretable feature representations and can replace a portion of the neural network layers, thereby providing meaningful priors and reducing the number of network parameters that must be learned.
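The filter-learning step of a single PCANet stage can be sketched in a few lines: collect all mean-removed patches, take the leading principal components, and reshape them into convolution filters. This is a minimal NumPy sketch under stated assumptions (stride-1 valid patches, one stage only). The cascaded second stage, binary hashing, and block histograms of the full PCANet in Ref. [42] are omitted, and the Rayleigh-distributed test images merely imitate single-look speckle amplitude.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def pcanet_stage_filters(images, patch=5, n_filters=8):
    """Learn one PCANet-style stage: PCA on mean-removed patches -> filters."""
    rows = []
    for img in images:
        # All overlapping patch x patch windows (stride 1, no padding).
        win = sliding_window_view(img, (patch, patch))
        p = win.reshape(-1, patch * patch)
        # Remove each patch's own mean before PCA.
        rows.append(p - p.mean(axis=1, keepdims=True))
    X = np.concatenate(rows, axis=0)               # (num_patches, patch*patch)
    # Leading eigenvectors of the patch scatter matrix serve as filters.
    eigvals, eigvecs = np.linalg.eigh(X.T @ X)      # ascending eigenvalues
    top = np.argsort(eigvals)[::-1][:n_filters]
    return eigvecs[:, top].T.reshape(n_filters, patch, patch)


def apply_filters(img, filters):
    """Valid-mode 2-D correlation of img with every filter."""
    k = filters.shape[-1]
    win = sliding_window_view(img, (k, k))          # (H-k+1, W-k+1, k, k)
    return np.einsum("ijkl,fkl->fij", win, filters)


rng = np.random.default_rng(0)
images = [rng.rayleigh(scale=1.0, size=(32, 32)) for _ in range(4)]
filters = pcanet_stage_filters(images)
maps = apply_filters(images[0], filters)
print(filters.shape, maps.shape)  # (8, 5, 5) (8, 28, 28)
```

The filters are orthonormal principal directions obtained in closed form, so no back-propagation is needed to get them. This is the sense in which a well-understood model can stand in for a learned layer.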

3.3.2 Substituting an incomplete physical model with a DNN

When the physical model is insufficient or no comprehensive theoretical foundation exists, the microwave scattering characteristics cannot be fully defined and explained. For example, the polarization decomposition of dual-polarization or single-polarization SAR images is less effective in describing the polarimetric characteristics of targets[49]. DNN can be used to proactively discover the potential association between targets and polarimetric properties by learning from massive amounts of data. The outcomes of data-driven learning can compensate for the inadequacy of human cognition. However, this method is susceptible to data bias and has poor generalization potential. Also worth considering is whether the learning results can be understood by humans and whether they can effectively support interpretation tasks.

3.4 DNN guided or inspired by a physical model

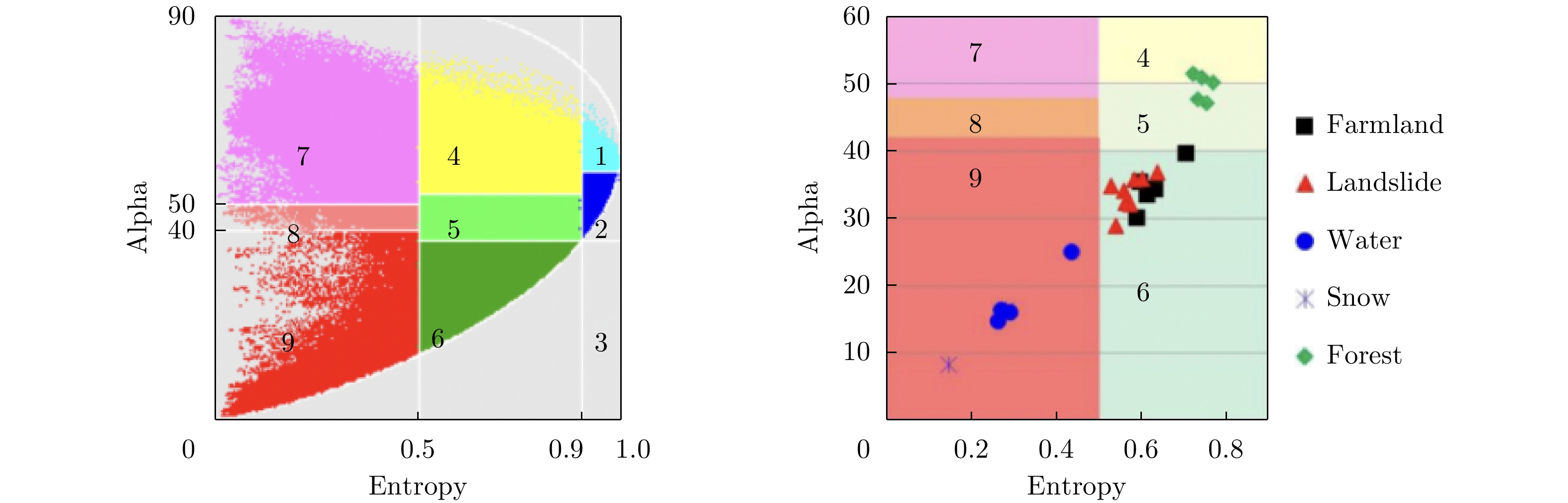

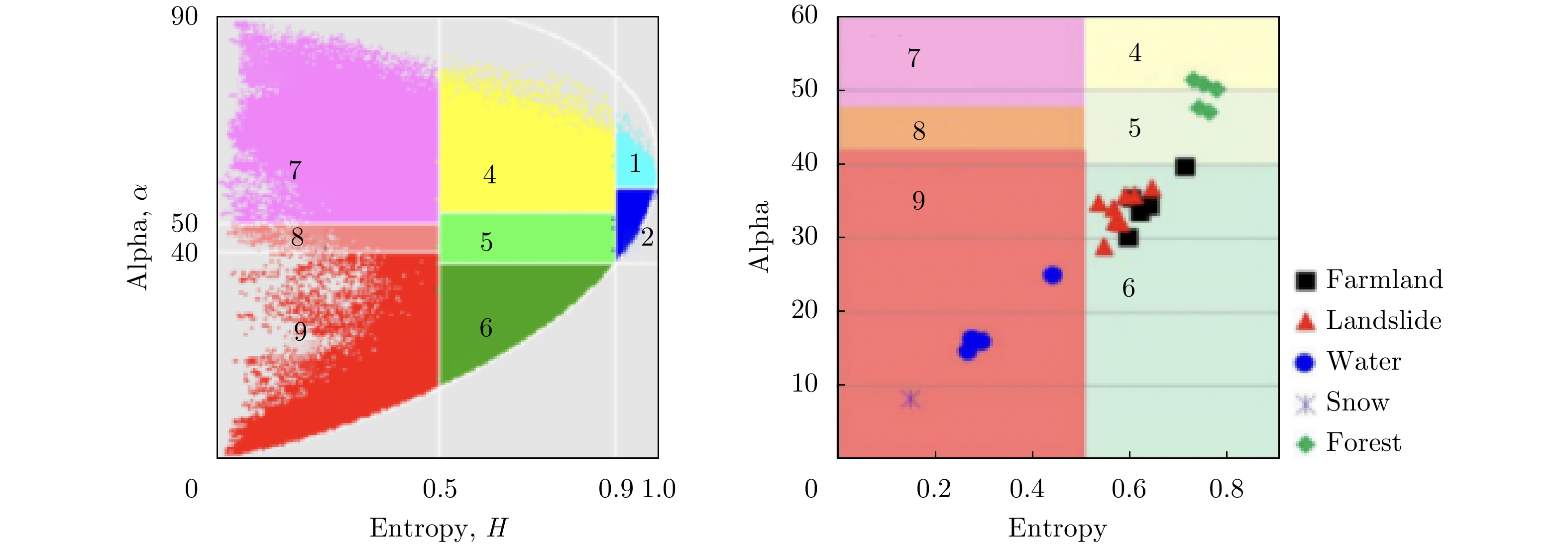

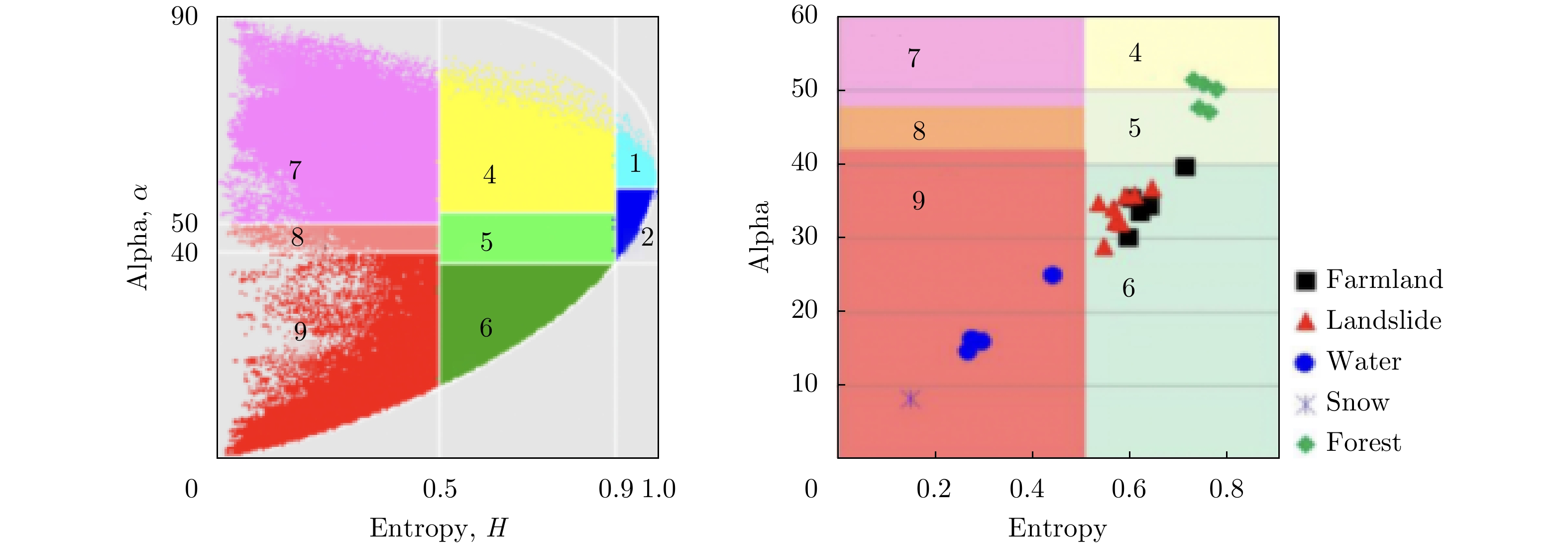

Traditional DCNN-based methods are mostly applied to SAR amplitude images, where the hierarchical feature representation is obtained by stacking convolution layers whose high-level features have a specific semantic meaning. In addition, interpreters can infer the types of ground objects based on the physical scattering characteristics; that is, the physical scattering characteristics also contain semantic information. Fig. 4 shows the H/α plane of quad-pol SAR and the distribution of some selected land-use and land-cover samples in the H/α plane[50]. On the basis of the potential relationship in semantics, we can design a PGNN that builds an unsupervised learning loop by using massive SAR images and their scattering characteristics to enhance the model generalization ability. This PGNN guides the model to learn high-level semantic feature representation with physical perception ability. The theoretical knowledge offered by the physical model can also inspire the design or initialization of the DNN model so that the network parameters themselves have physical meanings.
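The H/α plane in Fig. 4 comes from the Cloude-Pottier eigen-decomposition of the 3×3 polarimetric coherency matrix $T$. With eigenvalues $\lambda_i$ and unit eigenvectors $e_i$, the quantities are $p_i = \lambda_i / \sum_j \lambda_j$, $H = -\sum_i p_i \log_3 p_i$, and $\bar\alpha = \sum_i p_i \arccos|e_{i,1}|$, where $e_{i,1}$ is the first component of $e_i$. The NumPy sketch below computes both. Building $T$ from a single pixel's Pauli vector, without multi-look averaging, is a simplification made only for the example.

```python
import numpy as np


def pauli_vector(hh, hv, vv):
    """Pauli scattering vector k = [HH+VV, HH-VV, 2HV] / sqrt(2)."""
    return np.array([hh + vv, hh - vv, 2 * hv], dtype=complex) / np.sqrt(2)


def h_alpha(T):
    """Cloude-Pottier entropy H and mean alpha angle (degrees) of a 3x3 coherency matrix."""
    eigvals, eigvecs = np.linalg.eigh(T)            # Hermitian: real eigenvalues
    eigvals = np.clip(eigvals, 0.0, None)            # remove tiny negative round-off
    p = eigvals / eigvals.sum()                      # pseudo-probabilities
    nz = p > 0
    # Entropy with log base 3, written as p*log(1/p) so a pure target gives +0.0.
    H = float(np.sum(p[nz] * np.log(1.0 / p[nz])) / np.log(3))
    # alpha_i from the first component of each (column) eigenvector.
    alpha_i = np.degrees(np.arccos(np.clip(np.abs(eigvecs[0, :]), 0.0, 1.0)))
    return H, float(np.sum(p * alpha_i))


for name, (hh, hv, vv) in {"surface": (1, 0, 1), "dihedral": (1, 0, -1)}.items():
    k = pauli_vector(hh, hv, vv)
    T = np.outer(k, k.conj())                        # single-look coherency matrix
    H, alpha = h_alpha(T)
    print(f"{name}: H={H:.2f}, alpha={alpha:.1f} deg")
```

A pure surface scatterer lands at low entropy and α near 0°, and a pure dihedral at low entropy and α near 90°. Distributed land covers fill the region between these extremes, as in Fig. 4.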

Figure 4. The H/α plane for full-polarized SAR data and the selected land-use and land-cover samples distributed in Ref. [50]

3.5 DNN decision-making restricted by the physical model

The lack of annotated samples is a common challenge in many typical applications, such as SAR target recognition. End-to-end CNN training can directly predict the semantic labels of SAR images. Nevertheless, with scarce annotations it is difficult for DNN optimization to find a solution that generalizes well. If the physical scattering properties of SAR are used as additional information or as a constraint during training when samples are limited, the learning cost can be greatly reduced and the model's generalizability enhanced.

Because SAR data are inherently complex-valued, fully exploiting them means that the aforementioned procedures mainly involve processing complex data; it is therefore important to construct complex-valued counterparts of the prevalent DNN architectures. Note that each of the five types of PXDL schemes above uses just one of the five modules in Fig. 3 as an example. In practice, different PXDL schemes can be adopted for the same task, and several schemes can be integrated into a single algorithm. The next two sections review the current research status in terms of signals and characteristics, and in terms of semantics and applications, respectively.
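One common way to build the complex-valued counterpart of a convolution layer is to keep separate real and imaginary weights and expand the complex product into four real convolutions, $(x_r + i x_i) * (w_r + i w_i) = (x_r * w_r - x_i * w_i) + i\,(x_r * w_i + x_i * w_r)$. This construction is assumed here, since the section does not prescribe one. The 1-D NumPy sketch below does exactly that and pairs it with a split-type activation (ReLU applied separately to the real and imaginary parts, often called CReLU). Layers for SAR images would use the 2-D version of the same identity.

```python
import numpy as np


def complex_conv1d(x, w):
    """Complex convolution assembled from four real-valued convolutions."""
    xr, xi = x.real, x.imag
    wr, wi = w.real, w.imag
    real = np.convolve(xr, wr) - np.convolve(xi, wi)
    imag = np.convolve(xr, wi) + np.convolve(xi, wr)
    return real + 1j * imag


def crelu(z):
    """Split activation: ReLU on the real and imaginary parts independently."""
    return np.maximum(z.real, 0.0) + 1j * np.maximum(z.imag, 0.0)


rng = np.random.default_rng(0)
x = rng.standard_normal(16) + 1j * rng.standard_normal(16)   # e.g. one complex SAR line
w = rng.standard_normal(3) + 1j * rng.standard_normal(3)     # complex kernel
y = crelu(complex_conv1d(x, w))
# The four-real-convolution form should agree with NumPy's native complex convolution.
print(np.allclose(complex_conv1d(x, w), np.convolve(x, w)))
```

Writing the layer this way keeps both the amplitude and the phase of the input in play. Only real-valued primitives are required, which is why it maps easily onto standard DL frameworks.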

4. PXDL in SAR Signal and Characteristic Understanding

In the understanding stage of SAR signal and physical properties, empirical or theoretical models for processing or analysis have been developed based on the physical nature of SAR. With the rapid development of DL technology, a growing number of scholars have recently begun to focus on how to employ data-driven advantages to compensate for the deficiencies of present theoretical and empirical methodologies.

4.1 SAR image simulation

The aim of SAR image simulation is to simulate scenes and targets under different imaging conditions, and the simulated samples can contribute to subsequent interpretation tasks. Physical model-based electromagnetic simulation of SAR targets has been a significant challenge for decades. The selection of simulation parameters is a vital step that directly affects the resemblance of simulation results to real-world data, with a successful simulation facilitating subsequent interpretation tasks. As described in Section 3.1, DL can be embedded into a physical model to improve the parameterization. Several typical examples are introduced here. Niu et al.[51] proposed to use different DNNs to learn simulation parameters from real SAR images, thereby enabling autonomous parameter setup and enhancing the similarity between simulation results and real samples. In addition, Niu et al.[52] incorporated a DNN into the electromagnetic simulation system to learn the electromagnetic reflection model from the real SAR image while keeping the imaging model unchanged; that is, the DNN was used to improve the calculation of the electromagnetic reflection coefficient, thereby significantly improving the quality of the simulations.

Currently, a more common approach is to implement SAR image simulation entirely with a DNN, as described in Section 3.2, typically using a Generative Adversarial Network (GAN)-based technique. Initially, GAN-based SAR image simulation relied on techniques from computer vision[53]. Subsequent research began to consider the physical rules that generated SAR images must obey. For instance, Oh et al.[54] used a conditional GAN to incorporate physical parameters such as category, azimuth angle, and depression angle. Their PeaceGAN, based on multi-task learning, generates SAR images while also performing target pose estimation and classification, thereby accounting for the azimuth angle that influences the imaging results of SAR targets. Similar studies can be found in Refs. [55-57]. Building on a Conditional Variational Auto-Encoder (CVAE) and a GAN, Hu et al.[58] constructed an SAR target generation model with an explainable feature space. The GAN-based generation model can output an SAR target of a given category and observation angle, and the CVAE-based feature space provides a continuous feature representation over changing azimuth angles that can be used for target recognition.

In general, the studies[51,52] retain the physical process of electromagnetic simulation and use the DNN to improve submodules of the physical model, such as parameter selection. Ensuring that the simulation results are physically consistent yields strong generalization performance and improves the quality of the simulated images; this field still has considerable room for improvement. GAN-based SAR image simulation methods[53-58] have obvious advantages in computational complexity, operability, and other aspects. However, an urgent challenge in this area is how to guarantee that generated SAR images do not contradict the laws of electromagnetic scattering and can be interpreted with physical knowledge. Research in other fields, such as fluid simulation[59,60], includes physical parameters in GANs to regulate the generated results and embeds physical equations in adversarial learning to bring the generated results closer to reality. This work provides references and inspiration for the future development of this area.

4.2 SAR learning imaging

Recent research has also focused on incorporating DL technology into SAR imaging systems to enhance imaging quality and computing efficiency. The improvement of imaging quality is conducive to subsequent SAR image interpretation. Meanwhile, Luo et al.[41] proposed the idea of establishing the integration of imaging and interpretation through DL, where the target parameters are learned from echo data to serve SAR image interpretation.

One line of research integrates DL into existing imaging algorithms to improve parameter selection, as summarized in Section 3.1. In ISAR imaging, for instance, Qian et al.[61] noted that traditional Range Instantaneous Doppler (RID) methods used for maneuvering-target imaging suffer from low resolution and poor noise suppression. They therefore proposed a super-resolution ISAR imaging method in which DL assists Time-Frequency Analysis (TFA). A DNN learns the mapping from a low-resolution spectrum to its high-resolution reference signal and is then integrated into the RID imaging system to achieve well-focused super-resolution imaging. Traditional compressed sensing-based imaging techniques require appropriate parameters to be predefined by hand and need many iterations, and thus considerable time, for reconstruction. Liang et al.[62] proposed combining a CNN with the traditional Iterative Shrinkage-Thresholding Algorithm (ISTA) to learn the best parameters of the imaging process automatically while keeping the algorithm physically interpretable.

Other studies use DNNs to emulate imaging algorithms. The essence is to reformulate a signal-processing optimization algorithm as a neural network and train the algorithm parameters as network weights to enhance imaging performance. Many iterative sparse reconstruction algorithms can be unfolded into learnable neural networks. Examples derived from the iterative shrinkage-thresholding algorithm include the Learnable ISTA (LISTA)[63], Analytical LISTA (ALISTA)[64], and neuro-enhanced ALISTA[65]. ADMM-NET[66] and ADMM-CSNET[67] are based on the Alternating Direction Method of Multipliers (ADMM). In sparse microwave imaging, Mason et al.[68] first proposed modeling the SAR imaging process with a DNN based on the ISTA algorithm and showed that the learned network converges faster and achieves lower reconstruction error than the traditional ISTA algorithm. Many researchers have continued this investigation, e.g., Refs. [69-72], and Refs. [73-75] studied SAR learning imaging based on ADMM. A recent work[76] briefly surveyed current research and pointed out that the interpretability and universality of DL algorithms in SAR imaging applications should be strengthened further. In general, DL is expected to lead to imaging algorithms with greater speed, precision, and resolution, and to intelligent systems that integrate imaging and interpretation, which has enormous potential for future growth.
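The unfolding idea can be made concrete with ISTA for the sparse reconstruction problem $\min_x \tfrac12\|y - Ax\|_2^2 + \lambda\|x\|_1$. Its iteration is $x^{t+1} = \eta_{\lambda/L}\big(x^t + \tfrac1L A^H(y - Ax^t)\big)$, with soft-thresholding $\eta$ and $L = \|A\|_2^2$. Rewriting the step as $\eta_\theta(W_e y + S x^t)$ with $W_e = A^H/L$ and $S = I - A^H A/L$ turns each iteration into a network layer whose $W_e$, $S$, and $\theta$ can be trained, which is the LISTA idea. The NumPy sketch below implements ISTA and the unrolled form at initialization and checks that they coincide. A random complex measurement matrix stands in for a SAR observation operator (an assumption). Training the per-layer parameters is not shown, and giving every layer its own copy (untied weights) is one common design choice, not the specific architecture of Refs. [63,68].

```python
import numpy as np


def soft_threshold(z, theta):
    """Complex soft thresholding: shrink magnitudes by theta, keep phases."""
    mag = np.abs(z)
    return z * (np.maximum(mag - theta, 0.0) / np.maximum(mag, 1e-12))


def ista(A, y, lam, n_iter):
    """Plain ISTA for min_x 0.5*||y - A x||^2 + lam*||x||_1."""
    L = np.linalg.norm(A, 2) ** 2          # Lipschitz constant of the gradient
    x = np.zeros(A.shape[1], dtype=complex)
    for _ in range(n_iter):
        x = soft_threshold(x + A.conj().T @ (y - A @ x) / L, lam / L)
    return x


def unrolled_ista_init(A, lam, n_layers):
    """Unfold ISTA into n_layers layers with per-layer (W_e, S, theta).
    At this initialization the network reproduces ISTA; training would adapt them."""
    L = np.linalg.norm(A, 2) ** 2
    W_e = A.conj().T / L
    S = np.eye(A.shape[1]) - A.conj().T @ A / L
    return [(W_e.copy(), S.copy(), lam / L) for _ in range(n_layers)]


def unrolled_forward(layers, y):
    """Run the unrolled network: one soft-thresholded affine step per layer."""
    x = np.zeros(layers[0][1].shape[0], dtype=complex)
    for W_e, S, theta in layers:
        x = soft_threshold(W_e @ y + S @ x, theta)
    return x


rng = np.random.default_rng(1)
m, n, k = 64, 128, 4
A = (rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n))) / np.sqrt(2 * m)
support = rng.choice(n, size=k, replace=False)
x_true = np.zeros(n, dtype=complex)
x_true[support] = (1 + rng.random(k)) * np.exp(2j * np.pi * rng.random(k))
y = A @ x_true
lam = 0.1

x_hat = ista(A, y, lam, n_iter=500)
x_net = unrolled_forward(unrolled_ista_init(A, lam, n_layers=20), y)
print(np.allclose(x_net, ista(A, y, lam, n_iter=20)))   # 20 layers vs 20 ISTA steps
print(sorted(np.argsort(np.abs(x_hat))[-k:]) == sorted(support))
```

Once `W_e`, `S`, and `theta` are trained per layer, a network with far fewer layers than ISTA needs iterations can reach a comparable reconstruction. This is the speed advantage the unfolding literature reports.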

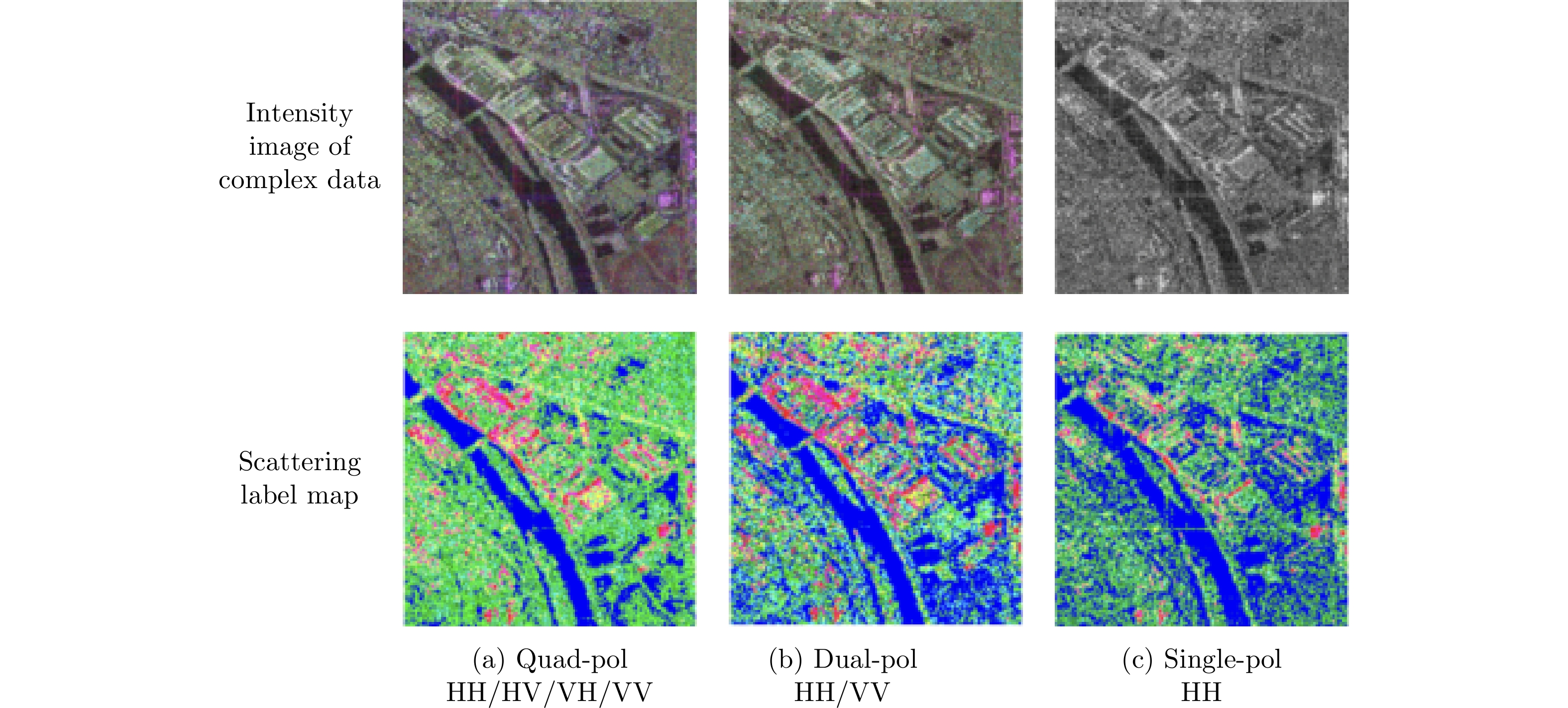

4.3 Understanding physical characteristics

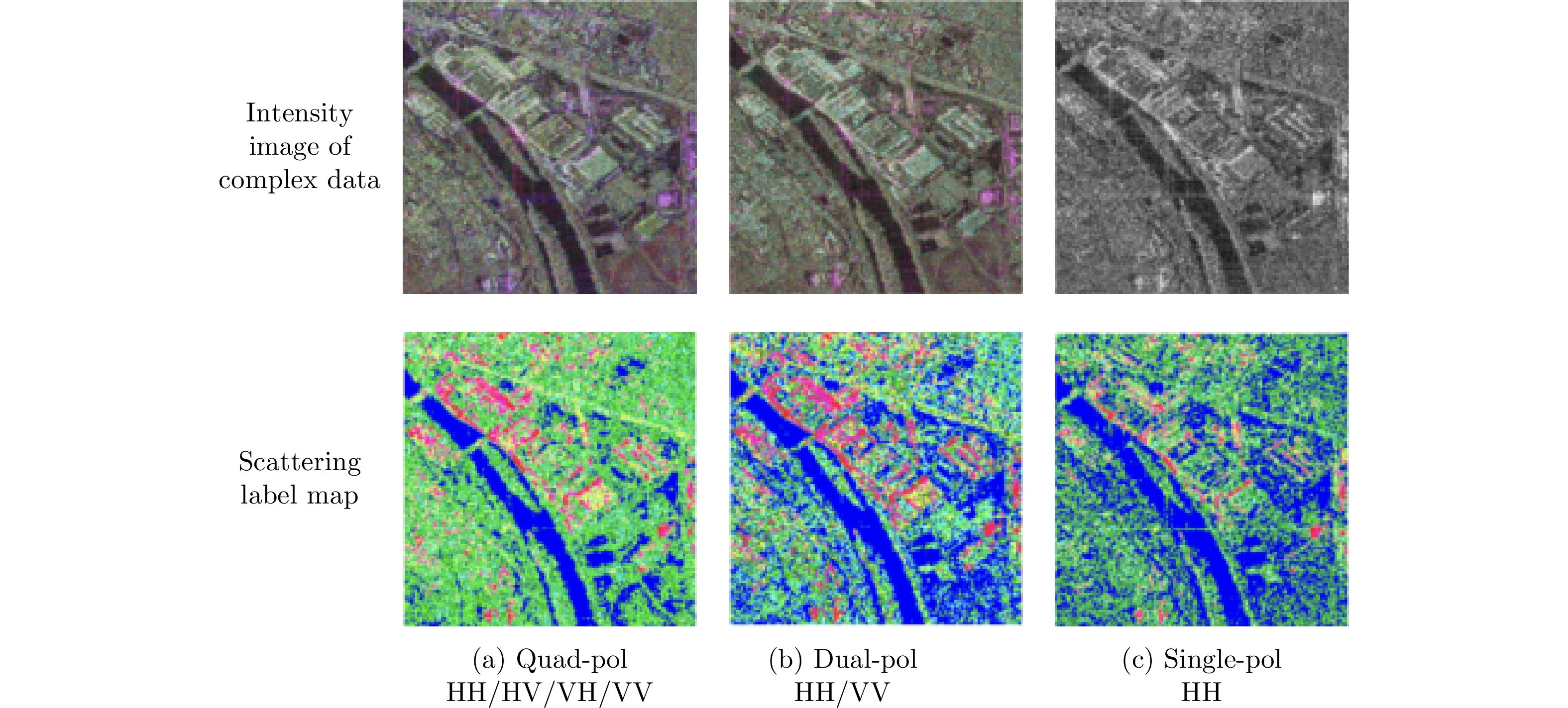

Understanding the physical microwave features of SAR images is crucial for the interpretation of SAR targets and scenes. For example, the polarization decomposition model, sub-aperture decomposition model, and interferometric phase diagram have been widely used in the fields of land classification[77], moving target detection[78], and terrain subsidence monitoring[79]. In some cases, the current physical property analysis model is difficult to use directly. The polarization decomposition of dual-polarization and single-polarization SAR data is less efficient[49], and interferometry has phase noise because of the atmosphere and topography[80]. Currently, some DL technologies have substituted the physical model in the aforementioned fields, employing a data-driven approach to learn the physical properties of SAR, as described in Section 3.3.2.

Polarization information inversion is a typical task that seeks to infer the full polarimetric features of a target from SAR data with incomplete polarization channels. Refs. [81,82] proposed using DNNs to learn complete polarization information from single-polarized SAR images. Song et al.[81] employed a CNN to extract texture features of single-channel SAR amplitude images and then mapped them into the polarimetric feature space through a feature conversion network to retrieve the essential elements of the polarimetric covariance matrix. A similar study is Ref. [83]. Zhao et al.[82] proposed a complex-valued CNN to learn volume and single scattering transfer for analyzing the physical scattering characteristics of single/dual-polarized SAR. All of the aforementioned DL methods employ polarimetric physical models, such as the polarimetric covariance matrix[81,83] and the Cloude-Pottier decomposition[82], to generate the ground truth for supervision. This allows the polarimetric scattering characteristics of targets in single-channel SAR images to be described.

SAR image colorization is one of the main applications in this direction. A major research branch of SAR image colorization is largely analogous to grayscale image colorization[84] or style transfer[85] in computer vision. It focuses primarily on assigning colors to a single-channel grayscale SAR image, or on converting it into the format of an RGB optical remote sensing image, to aid human visual interpretation[86]. However, the physical consistency of SAR is not guaranteed. Refs. [81-83] focus instead on acquiring polarization information through DL, and SAR image colorization is then achieved via Pauli decomposition so that the colored results have physical meaning.
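For fully polarimetric data, whether measured or predicted from single- or dual-polarized input as in Refs. [81-83], the Pauli color composite is conventionally formed from the magnitudes of the Pauli components. Red is $|S_{HH} - S_{VV}|/\sqrt2$ (even bounce), green is $\sqrt2\,|S_{HV}|$ (cross-polarized, often volume scattering), and blue is $|S_{HH} + S_{VV}|/\sqrt2$ (odd bounce). The sketch below assumes reciprocity ($S_{HV} = S_{VH}$). The per-channel percentile stretch used for display is an arbitrary choice, not part of the decomposition.

```python
import numpy as np


def pauli_rgb(hh, hv, vv, pct=99.0):
    """Pauli RGB composite from complex HH, HV, VV images (reciprocity assumed)."""
    red = np.abs(hh - vv) / np.sqrt(2)      # even-bounce (dihedral) scattering
    green = np.sqrt(2) * np.abs(hv)         # cross-pol, often volume scattering
    blue = np.abs(hh + vv) / np.sqrt(2)     # odd-bounce (surface) scattering
    rgb = np.stack([red, green, blue], axis=-1)
    # Display stretch (assumption): divide each channel by its percentile, clip to [0, 1].
    scale = np.percentile(rgb.reshape(-1, 3), pct, axis=0)
    return np.clip(rgb / np.maximum(scale, 1e-12), 0.0, 1.0)


# Two pixels: a surface-like scatterer (HH = VV) and a dihedral (HH = -VV).
hh = np.array([[1.0 + 0j, 1.0 + 0j]])
vv = np.array([[1.0 + 0j, -1.0 + 0j]])
hv = np.zeros_like(hh)
print(pauli_rgb(hh, hv, vv))
```

The surface pixel renders blue and the dihedral pixel red. Each color therefore names a scattering mechanism rather than an arbitrary learned palette.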

The sub-aperture decomposition of SAR images along azimuth has been widely applied in research on moving target detection and coherent target detection[87,88]. Spigai et al.[89] proposed a two-dimensional TFA for complex-valued SAR images to model four canonical targets. Ferro-Famil et al.[90] modeled non-stationary targets by examining azimuth-dependent polarization properties. Such empirical models cannot adequately describe all the complicated SAR targets found in wide-area scenes. To address this issue, Huang et al.[91] proposed an unsupervised learning method to automatically mine target backscattering variation patterns, and extended it to polarized SAR data[92] (as shown in Fig. 5). In this way, the empirical knowledge in Refs. [89,90] was verified and improved through a data-driven approach.
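Azimuth sub-aperture decomposition can be sketched in three steps: transform a complex single-look image to the azimuth-frequency (Doppler) domain, split the spectrum into bands, and transform each band back. Each resulting sub-aperture image sees the scene over a narrower range of azimuth look angles, so targets whose backscattering changes with aspect show different intensities across sub-apertures. The NumPy sketch below assumes azimuth along axis 0 and uses non-overlapping rectangular bands without spectral weighting. Practical implementations often add spectral equalization, windowing, and overlapping bands.

```python
import numpy as np


def subaperture_decompose(slc, n_sub=4, axis=0):
    """Split a complex SLC image into n_sub azimuth sub-aperture images by
    partitioning its azimuth spectrum into non-overlapping bands."""
    spec = np.fft.fftshift(np.fft.fft(slc, axis=axis), axes=axis)
    n = slc.shape[axis]
    edges = np.linspace(0, n, n_sub + 1).astype(int)
    subs = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        band = np.zeros_like(spec)
        sel = [slice(None)] * slc.ndim
        sel[axis] = slice(lo, hi)
        band[tuple(sel)] = spec[tuple(sel)]       # keep one Doppler band only
        subs.append(np.fft.ifft(np.fft.ifftshift(band, axes=axis), axis=axis))
    return np.stack(subs)


rng = np.random.default_rng(0)
slc = rng.standard_normal((64, 32)) + 1j * rng.standard_normal((64, 32))
subs = subaperture_decompose(slc, n_sub=4)
# Mean intensity per sub-aperture; an anisotropic target would vary across these.
print(subs.shape, np.round((np.abs(subs) ** 2).mean(axis=(1, 2)), 3))
# Because the bands partition the spectrum, the sub-apertures sum back to the input.
print(np.allclose(subs.sum(axis=0), slc))
```

Per-pixel intensity profiles across `subs` are the kind of azimuth-dependent backscattering signature that the TFA-based analyses above model or mine.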

Figure 5. The unsupervised learning results of different polarized SAR images based on TFA and pol-extended TFA models[92]

The physical characteristics learned by a DNN should be consistent with the physical nature of SAR and be physically interpretable. According to Song et al.[81], the covariance matrix predicted from single-polarization SAR images should satisfy the positive semi-definite constraint. The target scattering types learned through unsupervised learning[91] should cover the four typical targets proposed in Ref. [89]. De et al.[93] attempted to interpret the results of DNNs and relate their outputs to the physical properties of SAR.
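A positive semi-definite (PSD) requirement on a predicted polarimetric covariance matrix can be imposed in two ways. It can be applied after prediction, by projecting onto the PSD cone, or during training, as a penalty on negative eigenvalues. How Ref. [81] enforces it is not described here, so the NumPy sketch below shows both options as generic assumptions. The projection symmetrizes the matrix and clips negative eigenvalues, which gives the Frobenius-nearest Hermitian PSD matrix. The penalty sums the squared negative eigenvalues.

```python
import numpy as np


def project_psd(C):
    """Nearest (Frobenius) Hermitian PSD matrix: symmetrize, clip negative eigenvalues."""
    H = 0.5 * (C + C.conj().T)
    w, V = np.linalg.eigh(H)
    return (V * np.clip(w, 0.0, None)) @ V.conj().T


def psd_penalty(C):
    """Soft constraint: sum of squared negative eigenvalues of the Hermitian part."""
    w = np.linalg.eigvalsh(0.5 * (C + C.conj().T))
    return float(np.sum(np.clip(-w, 0.0, None) ** 2))


# A hypothetical network output that violates the constraint (eigenvalues 3 and -1).
C_pred = np.array([[1.0, 2.0], [2.0, 1.0]], dtype=complex)
C_fixed = project_psd(C_pred)
print(psd_penalty(C_pred), psd_penalty(C_fixed))
print(np.round(C_fixed.real, 3))
```

The penalty is zero exactly when the Hermitian part is PSD, so adding it to a training loss pushes predictions toward physically valid covariance matrices without forbidding intermediate violations.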

5. PXDL in SAR Semantic Understanding and Application

DL research on semantic understanding and SAR applications started earlier than research on understanding SAR signals and characteristics. Over the past few years, it has established a solid foundation in automatic target recognition, scene classification, and change detection. Recently, an increasing number of researchers have focused on how to integrate the benefits of physical models with DL techniques.

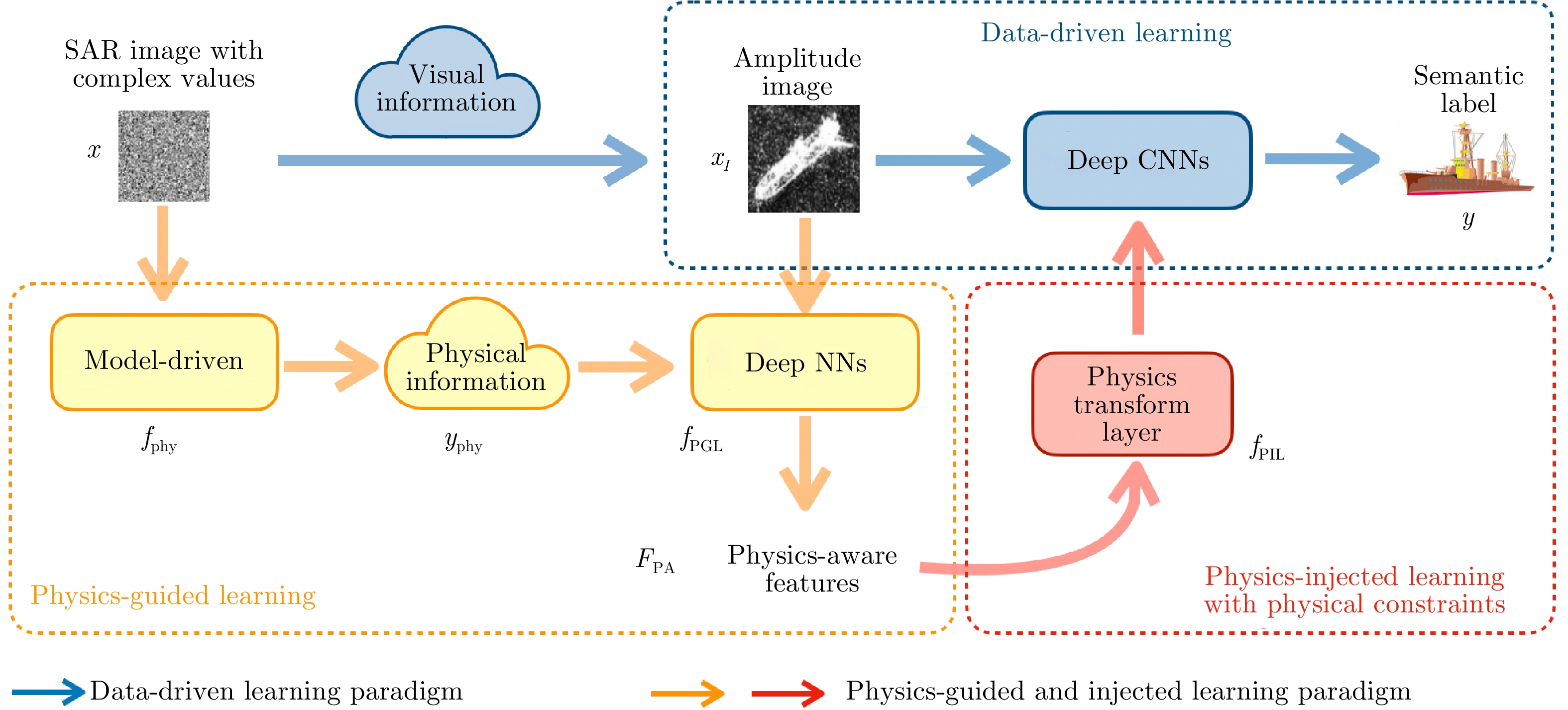

5.1 Physics-guided and injected learning

This section introduces a novel DL paradigm for SAR image semantic understanding tasks based on the method proposed in our recent work[94].

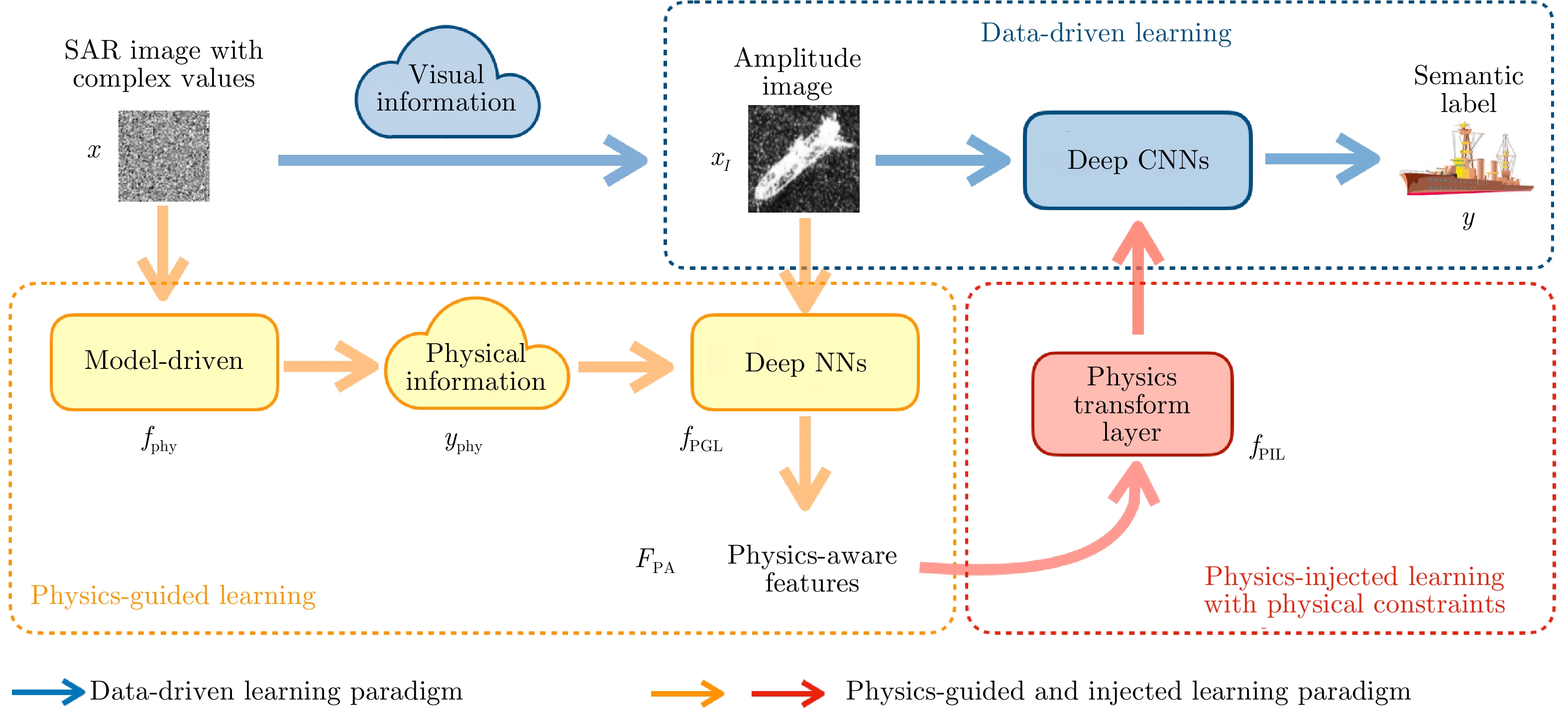

We denote the complex-valued SAR image as $x$ and the semantic label as $y$. The traditional data-driven learning paradigm constructs an end-to-end DNN mapping $f$ that takes $x$ (or, most often, only its amplitude information $x_I$) as input and outputs the semantic label $y$, denoted as $f: x \to y$. The form of the input data determines whether the network parameters are real- or complex-valued. Training the mapping $f$ requires a large number of labeled samples $(x, y)$. When annotations are limited, a common practice is to employ transfer learning and other optimization techniques, which are not elaborated upon here. This paper investigates the use of physics knowledge to lessen the dependence of DNNs on labeled data. The Physics-Guided and Injected Learning (PGIL) paradigm introduced here builds on the concepts presented in Sections 3.4 and 3.5, as shown in Fig. 6.

The physical model $f_{\mathrm{phy}}$ is assumed to take the SAR image data $x$ as input, and its output is written as $y_{\mathrm{phy}}$. For SAR images, $y_{\mathrm{phy}}$ can represent attribute scattering centers[95], polarimetric scattering characteristics, or sub-aperture decomposition results[11]. The PGNN proposed in Ref. [32] combines $y_{\mathrm{phy}}$ and observations to form a hybrid physics-data input to the network for prediction, that is, it learns the mapping

$$f: \{x, y_{\mathrm{phy}}\} \to y \tag{1}$$

This method can be summarized simply as multimodal fusion learning. Previous studies realized fusion at the data[96], feature[95], and decision[97] levels in SAR image classification or target recognition.
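To make the three fusion levels of Eq. (1) concrete, the sketch below shows one simple instance of each, assuming $y_{\mathrm{phy}}$ is available as per-pixel maps (data level), as a feature vector (feature level), or as class probabilities from a physics-based branch (decision level). The function names, array shapes, and the equal weighting `w=0.5` are illustrative assumptions, not the designs of Refs. [95,96,97].

```python
import numpy as np

def data_level_fusion(x_amp, y_phy):
    """Stack the amplitude image with physics-derived maps as input channels.
    x_amp: (H, W); y_phy: (C_phy, H, W) -> (1 + C_phy, H, W)."""
    return np.concatenate([x_amp[None], y_phy], axis=0)

def feature_level_fusion(feat_cnn, feat_phy):
    """Concatenate a learned CNN feature vector with a physics feature vector."""
    return np.concatenate([feat_cnn, feat_phy], axis=-1)

def decision_level_fusion(p_cnn, p_phy, w=0.5):
    """Weighted average of class probabilities from the two branches."""
    p = w * p_cnn + (1 - w) * p_phy
    return p / p.sum(axis=-1, keepdims=True)  # renormalize to a distribution
```

In all three cases $y_{\mathrm{phy}}$ enters the network as fixed, ready-made information; nothing in the fusion step adapts the physics output to the target task.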

The PGIL paradigm presented here differs slightly from the fusion strategy described above. It consists of two phases, unsupervised Physics-Guided Learning (PGL) and supervised Physics-Injected Learning (PIL):

$$f_{\mathrm{PGL}}: \{x_I, y_{\mathrm{phy}}\} \to F_{\mathrm{PA}} \tag{2}$$

$$f_{\mathrm{PIL}}: \{x, F_{\mathrm{PA}}\} \to y \tag{3}$$

PGL employs the knowledge provided by the physical model to drive DNN training without annotations, acquiring a semantically discriminative feature representation $F_{\mathrm{PA}}$ with physical perception ability. Unlike approaches in which $y_{\mathrm{phy}}$ is used directly as ready-made fusion information, the $F_{\mathrm{PA}}$ obtained by PGL under the guidance of $y_{\mathrm{phy}}$ takes the form of a feature map, which is more adaptive, closer to the high-level semantics of SAR images, and can assist the target task efficiently. The robust generalizability of $F_{\mathrm{PA}}$ is ensured by the unsupervised training mode, which can make full use of large-scale unlabeled training samples. In PIL, $F_{\mathrm{PA}}$ is injected into a conventional data-driven network through a designed feature transformation layer, and supervised learning is performed with a few labeled samples. The physical knowledge in PGL can also serve as a constraint term in the objective function of the integrated network to restrict training. The multimodal fusion learning of Eq. (1) can be considered a special case of Eq. (3), obtained by setting $F_{\mathrm{PA}} = y_{\mathrm{phy}}$. Readers can also refer to related work on multimodal feature fusion[98,99] when designing the PIL feature injection method.

5.2 SAR image classification

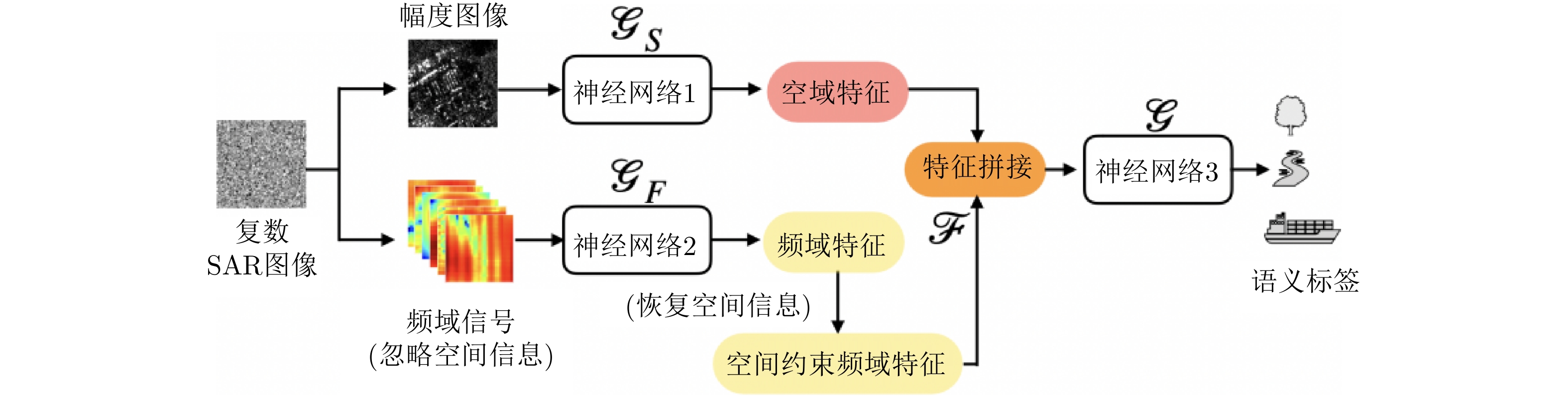

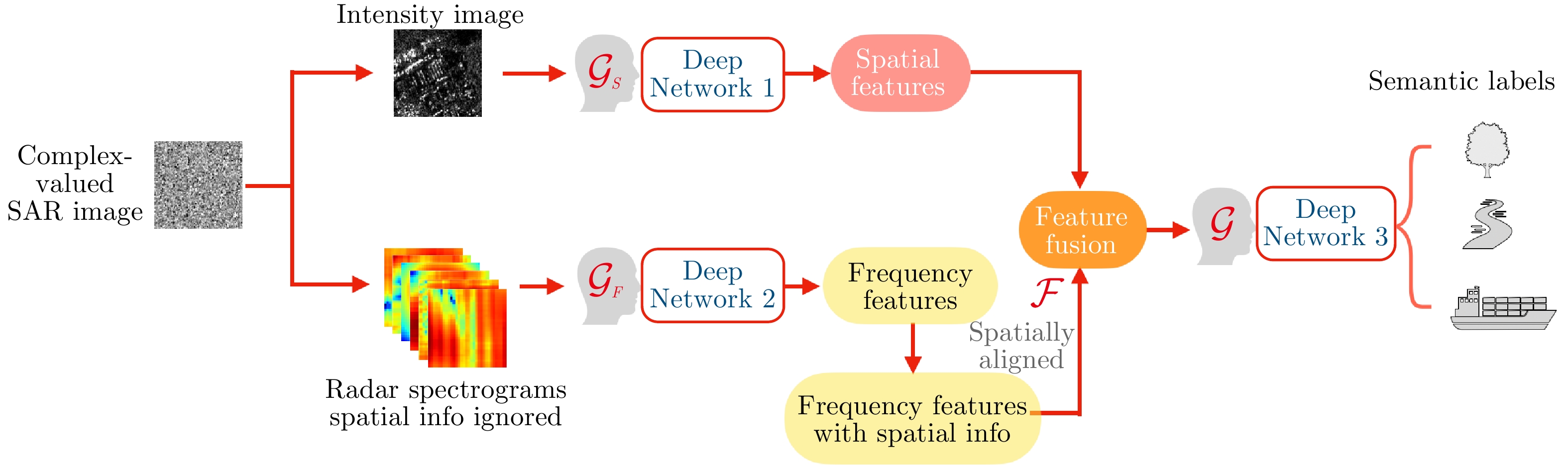

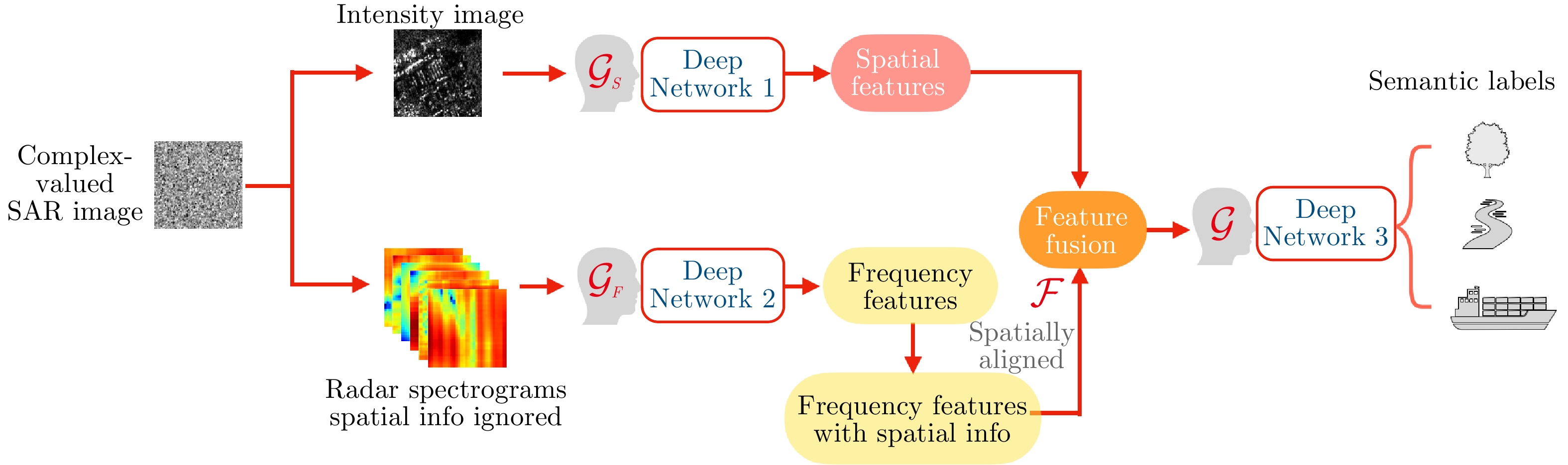

We have conducted substantial research on the SAR image classification problem based on the aforementioned PGIL paradigm, which is reviewed in this section. Deep SAR-Net (DSN)[11] extends the sub-aperture decomposition of complex SAR images to the continuous two-dimensional frequency domain to obtain a high-dimensional time-frequency “hyper-image,” and performs spatial-frequency domain feature fusion for SAR image classification, as shown in Fig. 7. Unlike the Complex-Valued Convolutional Neural Network (CV-CNN), which acts directly on the complex image to learn the mapping from the complex domain to semantic labels, DSN relies on an image decomposition based on TFA theory, which is equivalent to replacing part of the CV-CNN layers with an interpretable feature expression, as described in Section 3.3.1. NN-2 in Fig. 7 was initialized by unsupervised pre-training to obtain spatially constrained frequency features, which realizes Eq. (2); Eq. (3) is realized by the subsequent feature fusion and label prediction. The experimental results verified that DSN is superior to conventional CNNs, especially when only a few annotated samples are available: the overall accuracy of DSN improved by 8.58%, and by 14.06% for man-made targets. DSN also performs substantially better than the data-driven CV-CNN in the small-sample setting.
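The “hyper-image” idea can be illustrated by sliding a window over the 2-D spectrum of a complex patch and transforming each sub-band back to the image domain, so that every pixel gets a small map of backscattering versus (azimuth, range) frequency. This is a minimal discretized sketch, assuming a square Hanning window of size `win` moved in steps of `step`; Ref. [11] describes a continuous decomposition, and the window parameters and function name here are hypothetical.

```python
import numpy as np

def subband_hyper_image(slc, win=16, step=8):
    """Sliding-window 2-D sub-band decomposition of a complex SAR patch.

    Returns amplitudes of shape (n_f_az, n_f_rg, H, W): for each pixel, a
    coarse 2-D map of backscattering versus sub-band centre frequency.
    """
    H, W = slc.shape
    spec = np.fft.fftshift(np.fft.fft2(slc))           # centred 2-D spectrum
    taper = np.outer(np.hanning(win), np.hanning(win))  # 2-D window taper
    starts_az = range(0, H - win + 1, step)
    starts_rg = range(0, W - win + 1, step)
    out = np.empty((len(starts_az), len(starts_rg), H, W))
    for i, a in enumerate(starts_az):
        for j, r in enumerate(starts_rg):
            sub = np.zeros_like(spec)
            sub[a:a + win, r:r + win] = spec[a:a + win, r:r + win] * taper
            # Each sub-band back in the image domain gives one "look"
            out[i, j] = np.abs(np.fft.ifft2(np.fft.ifftshift(sub)))
    return out
```

A network such as NN-2 would then consume, per pixel, the `(n_f_az, n_f_rg)` slice `out[:, :, row, col]` rather than the raw complex values.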

Figure 7. The SAR image classification framework Deep SAR-Net (DSN)[11]

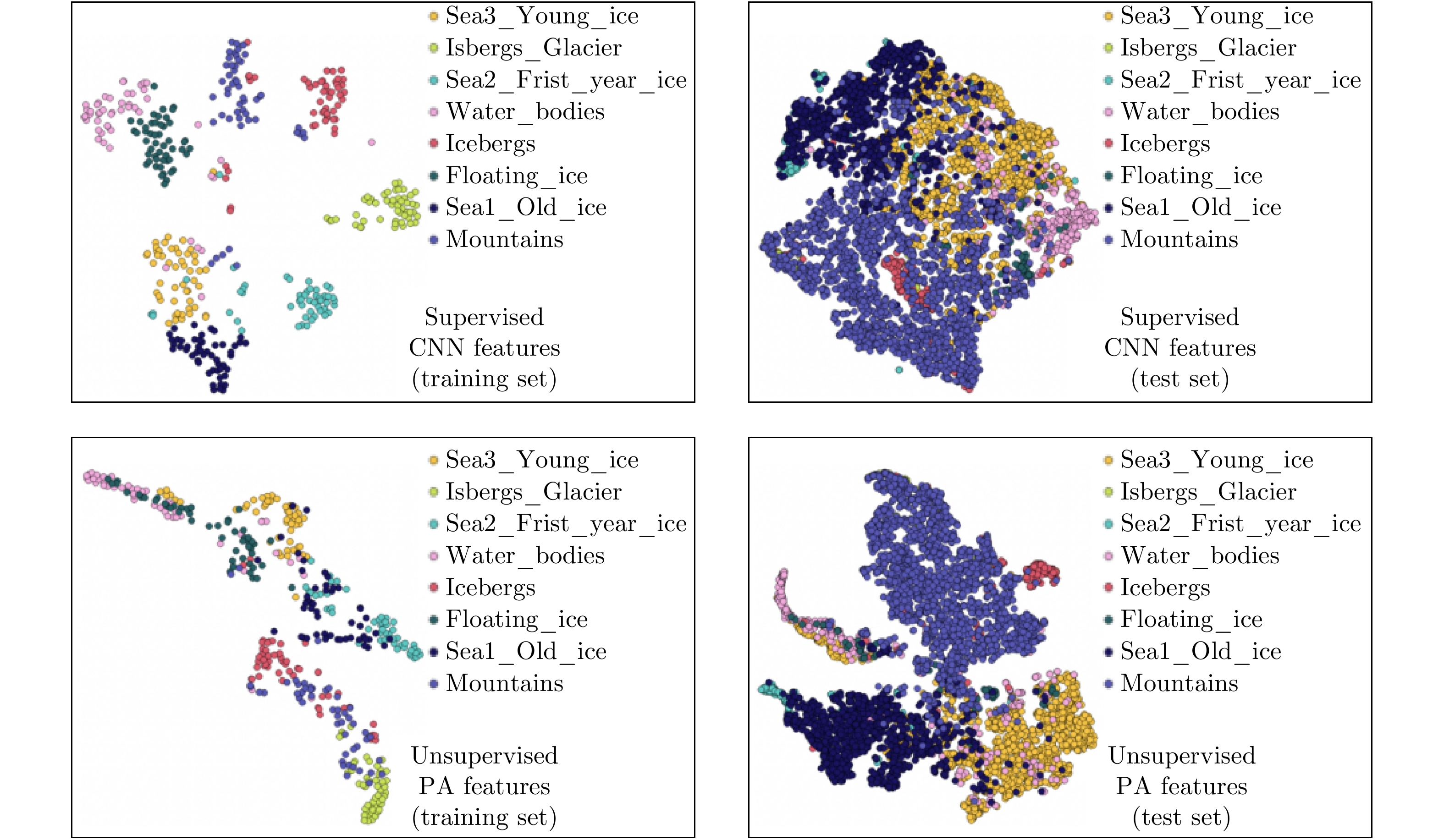

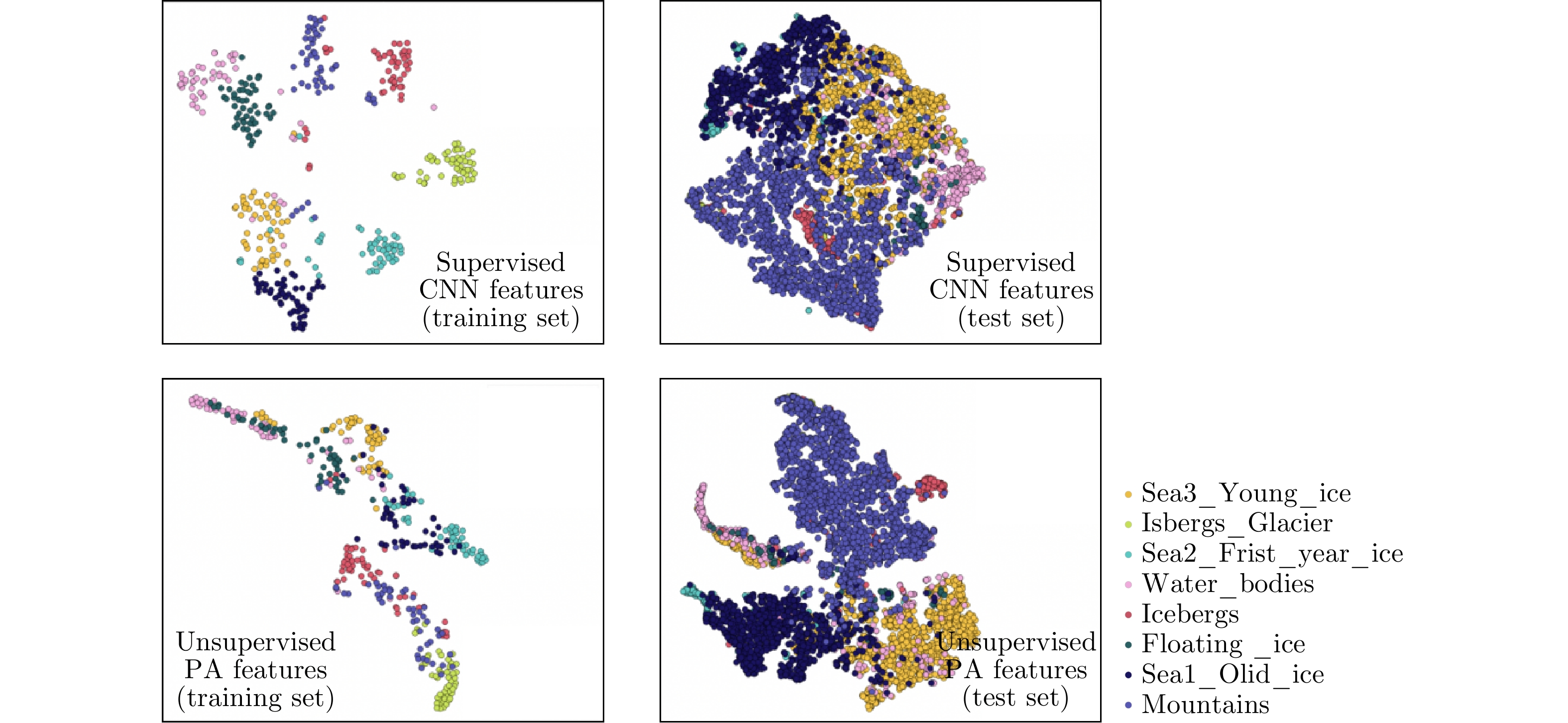

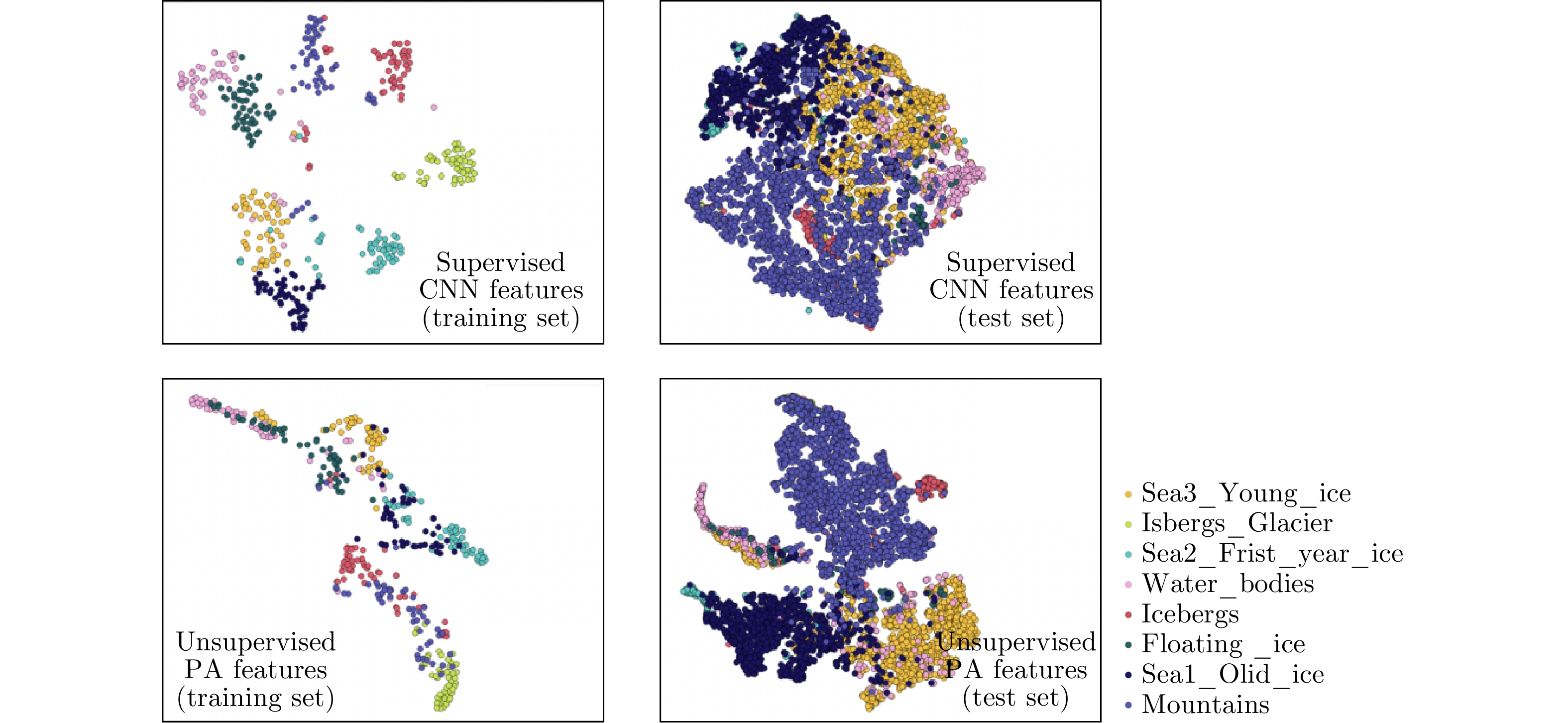

Huang et al.[92,100] recently proposed an unsupervised PGL method for SAR scene image classification and polar sea ice type recognition, which learns discriminative semantic feature representations with physical perception ability. The method aims to improve the transparency of the model and enhance the DNN's understanding of the physical meaning of SAR images. The physical models used for guidance include the entropy-based H/α-Wishart algorithm[101], the Kennaugh matrix, the geodesic distance-based GD-Wishart algorithm[102], the continuous two-dimensional sub-band decomposition of single-polarization complex SAR images, and its extended version for polarized data[89,92]. The objective function rests on the basic assumption that SAR physical scattering characteristics are correlated with SAR image semantics, as shown in Fig. 4, where the scattering class is highly related to semantics. On the basis of this relationship, a differentiable objective function is designed to guide the neural network to learn features that possess physical perception ability and contain high-level semantic information, completing the process described in Eq. (2). The unsupervised training strategy can make full use of unlabeled SAR image samples, which helps the features generalize to the test set. Ref. [94] quantifies the physical perception ability of features, demonstrating that the features learned by PGL obey physical constraints that conventional CNN features do not. Fig. 8 compares the feature distributions of the unsupervised physics-guided learning method[100] with those of supervised CNN learning on the training and test sets. The features obtained from PGL retain better semantic discrimination on the test set.
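Among the guiding physical models listed above, the H/α-Wishart algorithm[101] starts from the Cloude-Pottier entropy $H$ and mean alpha angle $\alpha$ of the $3\times3$ coherency matrix $T$: with eigenvalues $\lambda_i$, $p_i = \lambda_i/\sum_j \lambda_j$, $H = -\sum_i p_i \log_3 p_i$, and $\alpha = \sum_i p_i \alpha_i$ where $\alpha_i = \arccos|e_{i1}|$ is taken from the first component of the $i$-th eigenvector. The sketch below computes only these two parameters under the assumption that $T$ is already spatially averaged and Hermitian; the zone-based initialization and Wishart iterations that complete the algorithm are omitted, and the function name is hypothetical.

```python
import numpy as np

def h_alpha(T):
    """Cloude-Pottier entropy H and mean alpha (degrees) of a 3x3 coherency matrix."""
    w, V = np.linalg.eigh(T)          # ascending real eigenvalues, eigenvectors in columns
    w = np.clip(w, 0.0, None)         # guard against tiny negative round-off
    p = w / w.sum()                   # pseudo-probabilities of the scattering mechanisms
    nz = p > 0
    H = float(-np.sum(p[nz] * np.log(p[nz])) / np.log(3))  # log base 3
    # alpha_i from the first (surface-like) component of each eigenvector
    alpha_i = np.degrees(np.arccos(np.clip(np.abs(V[0, :]), 0.0, 1.0)))
    alpha = float(np.sum(p * alpha_i))
    return H, alpha
```

A pure surface scatterer ($T = \mathrm{diag}(1,0,0)$) gives $H = 0$ and $\alpha = 0°$, a pure dihedral ($T = \mathrm{diag}(0,1,0)$) gives $\alpha = 90°$, and a fully random $T \propto I$ gives $H = 1$; the resulting $(H, \alpha)$ class is the kind of $y_{\mathrm{phy}}$ that can guide PGL.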

Figure 8. The feature visualization of the unsupervised physics guided learning and supervised CNN classification on training and test set[100]

Ref. [92] proposed combining the physics-guided network with a small-sample learning algorithm for classification in the decision stage, as mentioned in Section 3.5. Ref. [94] designed a multi-scale feature transformation operator to realize Eq. (3) and added the constraints of physics-guided learning to the decision-learning process to ensure the physical consistency of the semantic features used for classification. In addition, the authors of Ref. [94] interpreted $y_{\mathrm{phy}}$, the guide signal that drives PGL training, evaluated the importance of the physical model in the algorithm, and examined how the final prediction can be constrained to be physically consistent. By interpreting $y_{\mathrm{phy}}$, they also discussed the existing defects of the algorithm and directions for future improvement.

5.3 SAR Automatic Target Recognition (SAR-ATR)

SAR-ATR has long received considerable attention. The classical definition of SAR-ATR includes three steps: target detection, discrimination, and recognition. With end-to-end DNNs, SAR-ATR is generally divided into two parts: target detection and target recognition.

The Attribute Scattering Center (ASC) model of SAR targets is widely used in traditional SAR-ATR. Based on the geometrical theory of diffraction and physical optics, the ASC model employs a set of parameters to characterize the electromagnetic and geometric characteristics of the target, which can accurately depict the physical properties of SAR targets[48]. Several recent studies have integrated the ASC model with DL for SAR target recognition.
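For reference, a commonly used parametric form of a single attributed scattering center in the frequency-aspect domain is $E(f,\phi) = A\,(j f/f_c)^{\alpha}\exp\!\big(-j\tfrac{4\pi f}{c}(x\cos\phi + y\sin\phi)\big)\,\mathrm{sinc}\!\big(\tfrac{2\pi f}{c}L\sin(\phi-\bar\phi)\big)\exp(-2\pi f\gamma\sin\phi)$, with amplitude $A$, frequency dependence $\alpha$, position $(x,y)$, length $L$, orientation $\bar\phi$, and aspect dependence $\gamma$. The sketch below evaluates it with NumPy; the exact form and parameter names in Ref. [48] may differ, and the function name is hypothetical.

```python
import numpy as np

C = 3e8  # speed of light (m/s)

def asc_response(f, phi, A, alpha, x, y, L, phi_bar, gamma, fc):
    """Frequency-aspect response of one attributed scattering center.

    f: frequencies (Hz), phi: aspect angles (rad); arrays that broadcast.
    """
    freq_dep = (1j * f / fc) ** alpha                  # frequency dependence (alpha)
    position = np.exp(-1j * 4 * np.pi * f / C * (x * np.cos(phi) + y * np.sin(phi)))
    # np.sinc(u) = sin(pi u)/(pi u), so sinc(2 pi f L sin(.)/c) = np.sinc(2 f L sin(.)/c)
    length = np.sinc(2 * f * L * np.sin(phi - phi_bar) / C)  # distributed scatterer
    aspect = np.exp(-2 * np.pi * f * gamma * np.sin(phi))    # localized aspect decay
    return A * freq_dep * position * length * aspect
```

With $L = 0$ the scatterer is localized and its magnitude is independent of aspect; with $L > 0$ the response peaks at broadside ($\phi = \bar\phi$) and falls off elsewhere, which is the geometric cue that Refs. [95,103,104] feed into DL models.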

Some studies follow Eq. (1) for feature fusion. For example, Zhang et al.[95] proposed a two-way FEC learning framework, which transforms the parameterized ASC representation into bag-of-words features that are fused with a CNN feature map. Li et al.[103] transformed ASCs into several component feature maps with definite physical significance and fused them with the global features of a CNN to efficiently capture the local electromagnetic characteristics of the target. Ref. [104], similar to Ref. [103], performed partial convolution learning on ASC components with a bidirectional recurrent neural network. Liu et al.[105] regarded the amplitude and phase of SAR target images as separate modalities and proposed multimodal manifold feature learning and fusion for target recognition. Performance improved with limited samples, but how amplitude and phase each contribute to the recognition process has yet to be clarified. Refs. [95,103,104] used the geometric information of scattering centers provided by the ASC model to strengthen the DL model's understanding of SAR targets, which is more helpful for improving the interpretability of the neural network.

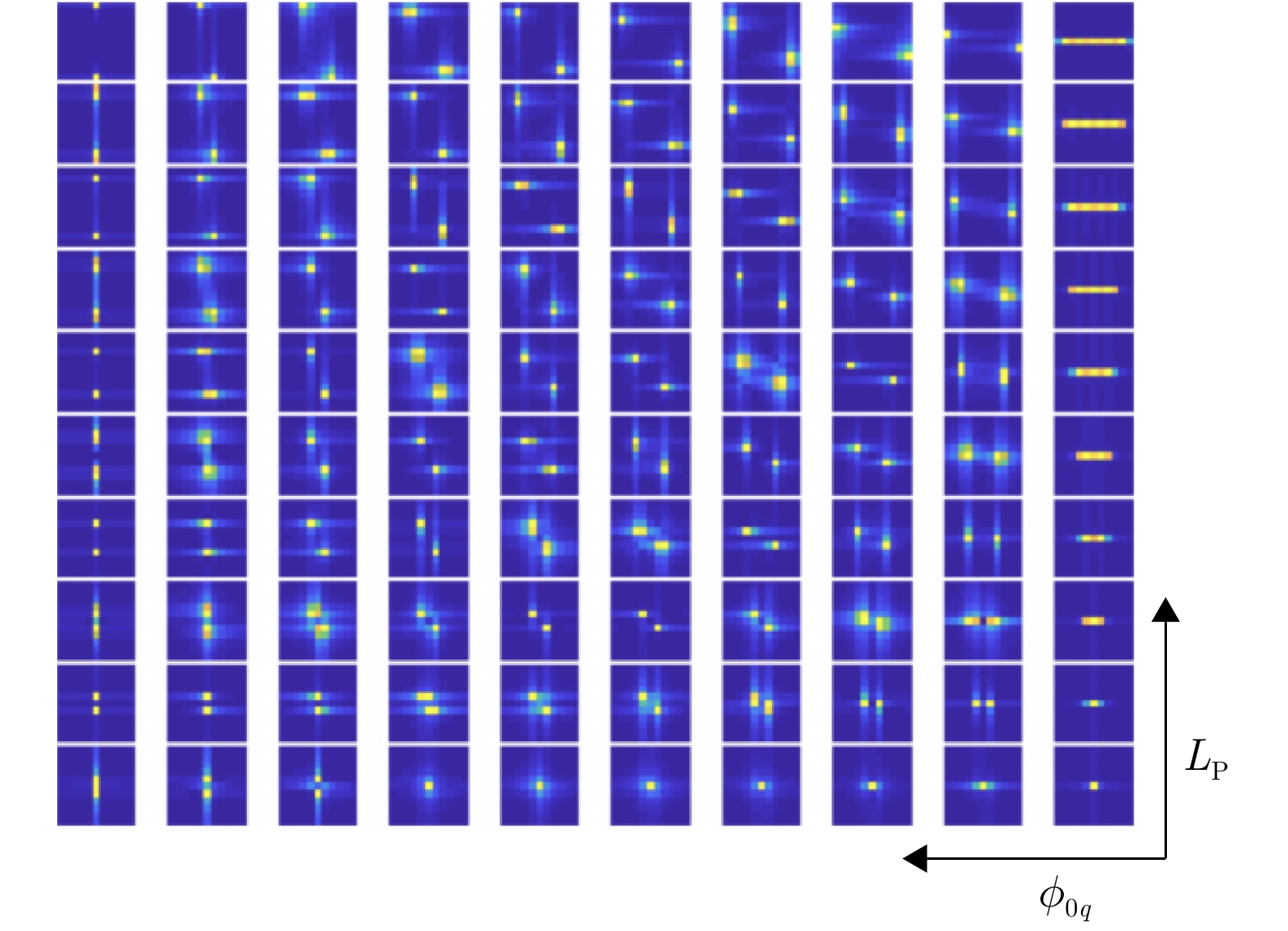

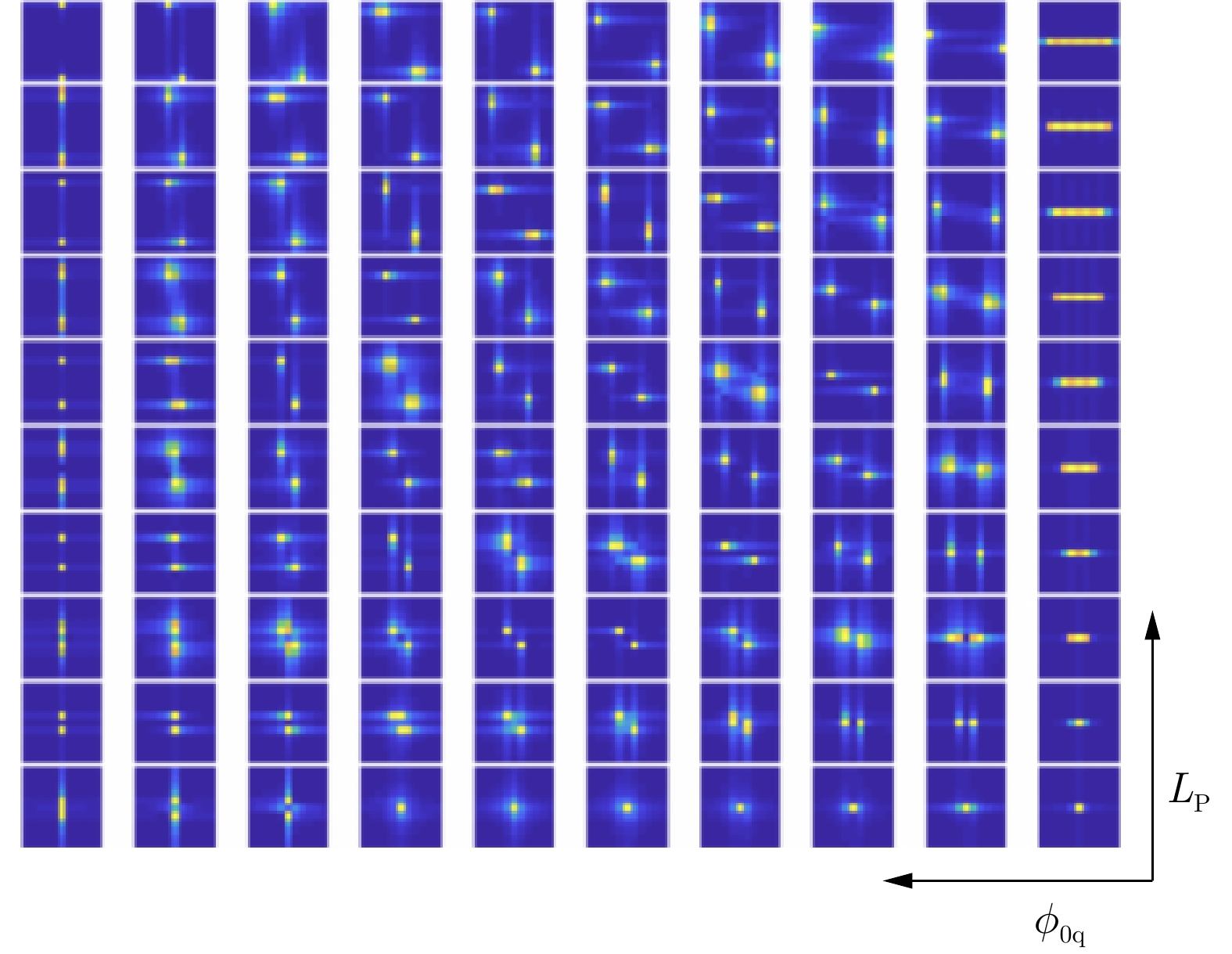

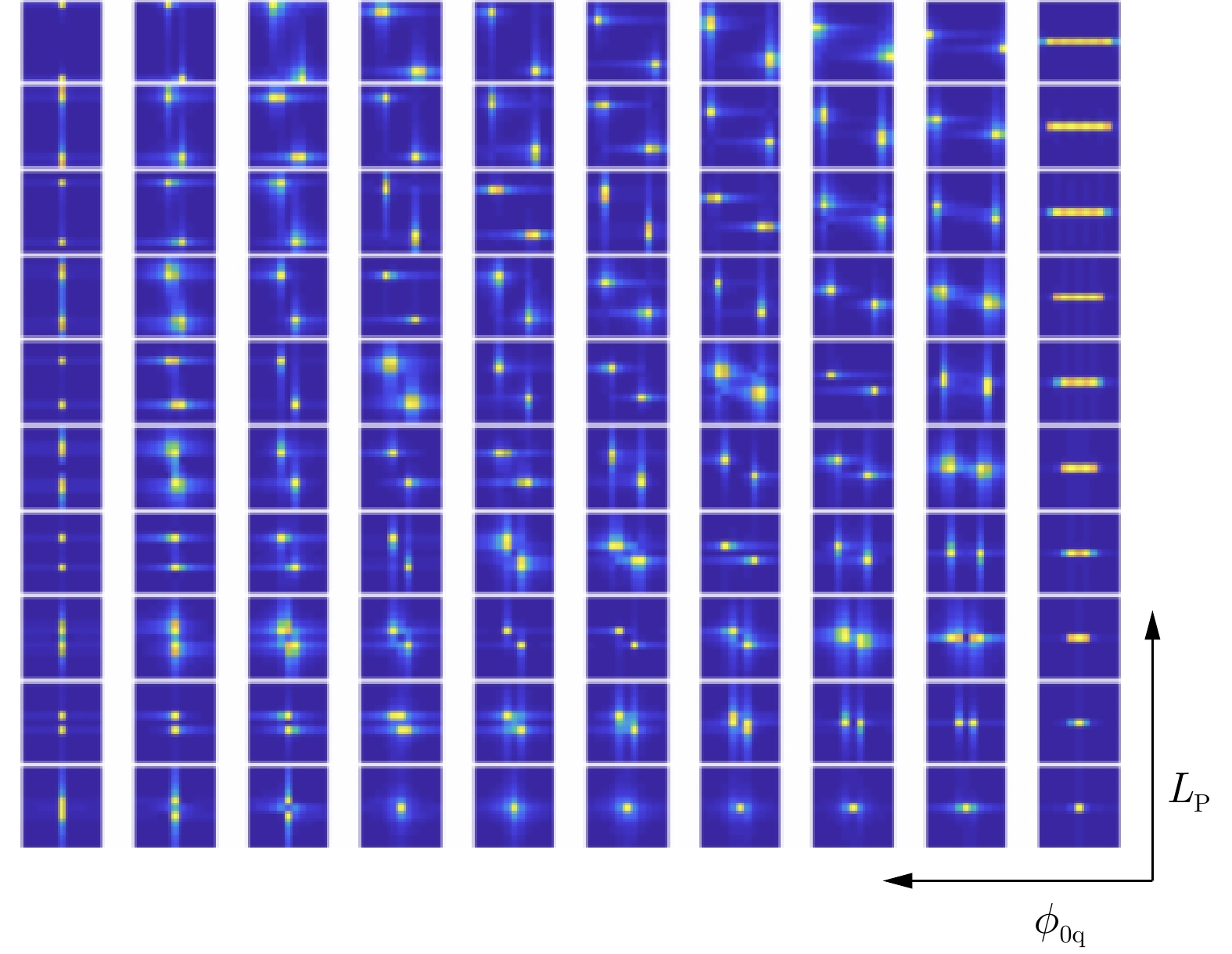

As indicated in Section 3.4, another line of research is the heuristic learning of physical models, in which PGNN designs embed physical principles into neural networks to make them physically interpretable. Liu et al.[106] proposed transferring the domain knowledge of the ASC model to the first-layer convolution kernels of a CV-CNN, which endows the initialized neurons with physical significance. Fig. 9 visualizes the amplitude of the first-layer complex convolution kernels based on the ASC model[106]. The horizontal axis denotes azimuth angles from 0° to 90° in 10° intervals, and the vertical axis represents different scattering center lengths. Compared with random initialization, this method not only speeds up network optimization significantly but also provides interpretability, yielding hidden-layer feature representations with physical meaning. In similar work, the polarimetric rotation kernel proposed by Cui et al.[107] adaptively learns the polarimetric rotation angle in CNNs. For the use of physical knowledge in neural network design, readers can consult related studies in other disciplines[108,109].
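The polarimetric rotation kernel of Ref. [107] builds on the standard rotation of the coherency matrix about the radar line of sight, $T(\theta) = R(\theta)\,T\,R(\theta)^H$. The sketch below is not the authors' implementation: it applies that rotation and, as a stand-in for an angle learned by back-propagation inside a CNN, selects the angle by grid search so that the cross-polarized term $T_{33}$ is minimized, a criterion used in polarimetric orientation compensation. The function names and the search grid are assumptions.

```python
import numpy as np

def rotation_matrix(theta):
    """Real unitary rotation acting on the Pauli-basis coherency matrix (theta in rad)."""
    c, s = np.cos(2 * theta), np.sin(2 * theta)
    return np.array([[1.0, 0.0, 0.0],
                     [0.0, c, s],
                     [0.0, -s, c]])

def rotate_coherency(T, theta):
    """T(theta) = R(theta) T R(theta)^H; the trace (total power) is preserved."""
    R = rotation_matrix(theta)
    return R @ T @ R.conj().T

def best_rotation_deg(T, angles_deg=None):
    """Grid-search stand-in for a learned angle: the theta that minimizes T33."""
    if angles_deg is None:
        angles_deg = np.linspace(-45.0, 45.0, 181)  # 0.5-degree grid
    t33 = [rotate_coherency(T, np.deg2rad(a))[2, 2].real for a in angles_deg]
    return float(angles_deg[int(np.argmin(t33))])
```

In a network, `theta` would be a trainable parameter and `rotate_coherency` a differentiable layer, so the angle is fitted to the task loss instead of the fixed $T_{33}$ criterion used here.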

Figure 9. The amplitude images of convolution kernels in the first layer of CV-CNN based on ASC model initialization[106]

Transfer learning and domain adaptation are also common research directions in SAR target recognition. Previous studies proposed transfer learning and domain adaptation methods from SAR scene images[9], natural images, and optical remote sensing images[8,10] to SAR target recognition. In 2017, Malmgren-Hansen et al.[39] proposed, for the first time, using simulated SAR targets as source data to learn real SAR target classes. However, SAR targets are sensitive to imaging parameters such as azimuth angle and wavelength, which strongly affect their morphological structure in SAR images. To ensure that a pre-trained model can correctly recognize SAR objects under real imaging settings, physical perception must be incorporated. Recently, He et al.[110] applied domain adaptation to narrow the differences in high-level features among simulated SAR targets under different imaging conditions, so that the pre-trained model can identify SAR targets across those conditions. In Ref. [111], simulated SAR targets are used as source data in few-shot learning for SAR target recognition, and data augmentation is performed with SAR domain knowledge of vehicle azimuth, amplitude, and phase. Similarly, Agarwal et al.[112] proposed augmenting SAR data by physics-model-based azimuth interpolation to support the training of DL algorithms.
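The text does not state which discrepancy measure Ref. [110] minimizes between feature distributions. One common choice in domain adaptation is the squared maximum mean discrepancy (MMD) with a Gaussian kernel, sketched below as a hypothetical illustration; `sigma` and the function name are assumptions, and in training this quantity would be added to the loss computed on high-level features of the two imaging conditions.

```python
import numpy as np

def gaussian_mmd2(X, Y, sigma=1.0):
    """Biased estimate of squared MMD between feature sets X (n, d) and Y (m, d)."""
    def kernel(A, B):
        # Pairwise squared Euclidean distances, then Gaussian (RBF) kernel
        d2 = np.sum(A**2, 1)[:, None] + np.sum(B**2, 1)[None, :] - 2 * A @ B.T
        return np.exp(-d2 / (2 * sigma**2))
    return kernel(X, X).mean() + kernel(Y, Y).mean() - 2 * kernel(X, Y).mean()
```

The value is near zero when the two feature sets come from the same distribution and grows as they drift apart, so minimizing it pulls features of, for example, different simulated azimuths or wavelengths together.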

Common public target recognition datasets, such as Moving and Stationary Target Acquisition and Recognition (MSTAR)[113] and OpenSARShip[114], provide the original complex data, which facilitate research on PXDL and the integration of physical models such as attribute scattering centers into data-driven methods. However, public datasets for SAR target detection, such as AIR-SARShip[115], generally provide only amplitude images. DL-based target detection mostly originates from computer vision and is performed in the image domain (for details, see the review in Ref. [116]). This is another significant obstacle restricting the development of physically explainable DL methods for object detection. Lei et al.[96] proposed a feature-enhanced DL method based on a complex-valued SAR ship detection dataset with rotated bounding boxes, which feeds the scattering results of sub-aperture decomposition together with amplitude information into the network for learning. There remains considerable room for developing advanced SAR target detection datasets with more comprehensive information, as well as PXDL detection methods.

5.4 SAR image semantic segmentation

SAR image semantic segmentation aims to assign a semantic label to each pixel in an SAR image. Because of complex backgrounds, speckle, discontinuous target morphology, and other phenomena, it is challenging to obtain satisfactory results on SAR images with DL segmentation methods borrowed from computer vision. In the early stages, pixel-level SAR image classification was often performed on Polarimetric SAR (PolSAR) images because polarimetric features help distinguish target classes with different scattering characteristics. Later DL algorithms often took polarimetric feature components as input to improve the understanding of target scattering characteristics[117-120]. These studies fall under the concept described in Section 3.3.1.
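A typical example of using polarimetric feature components as network input is the Pauli decomposition of the scattering matrix, whose three magnitudes are often mapped to RGB channels. The sketch below assumes reciprocity ($S_{HV} = S_{VH}$) and per-pixel complex arrays; whether Refs. [117-120] use exactly these channels is not stated, and the function name is hypothetical.

```python
import numpy as np

def pauli_channels(s_hh, s_hv, s_vv):
    """Pauli-basis magnitudes as a 3-channel input, order (R, G, B).

    Assumes reciprocity (S_hv == S_vh); inputs are complex arrays of equal shape.
    """
    r = np.abs(s_hh - s_vv) / np.sqrt(2)  # even-bounce (dihedral) scattering
    g = np.sqrt(2) * np.abs(s_hv)         # cross-pol, volume-like scattering
    b = np.abs(s_hh + s_vv) / np.sqrt(2)  # odd-bounce (surface) scattering
    return np.stack([r, g, b], axis=0)
```

The squared channels sum to the total power $|S_{HH}|^2 + 2|S_{HV}|^2 + |S_{VV}|^2$ (the span), so the input separates scattering mechanisms without discarding energy.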

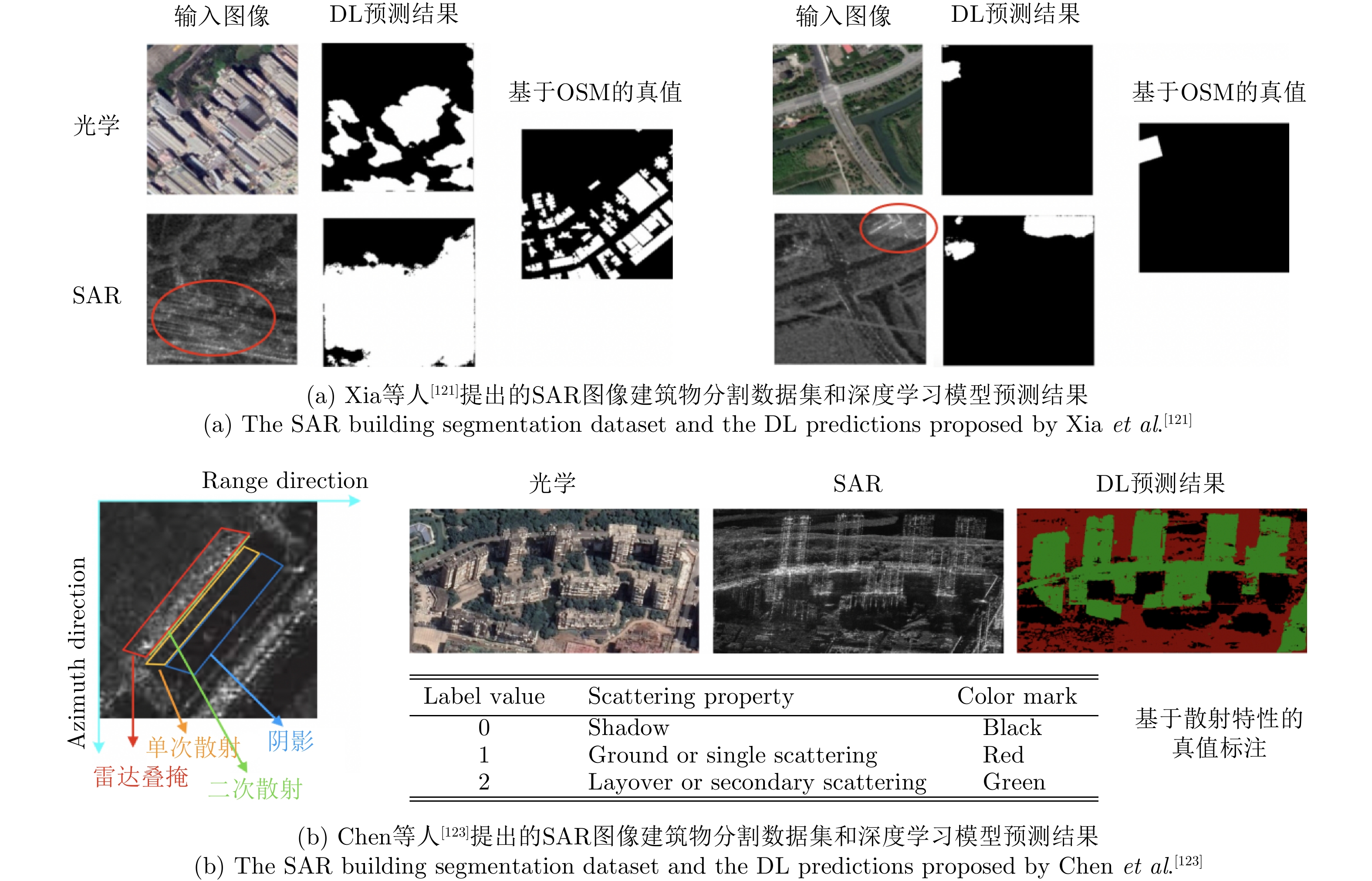

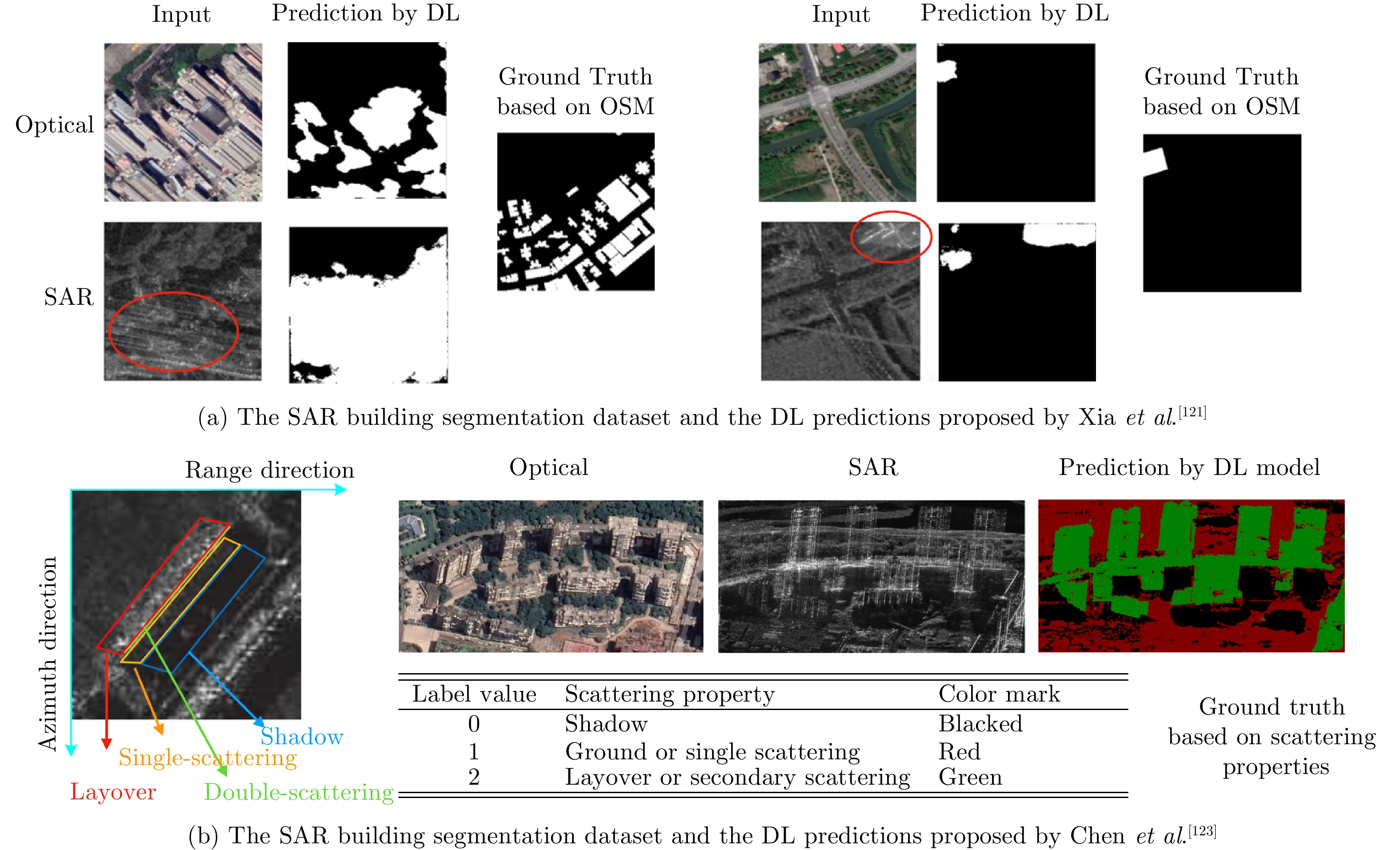

Building segmentation is an important branch of SAR image semantic segmentation and is widely used in global urbanization monitoring and three-dimensional building reconstruction. We mainly discuss research progress in this area. Recently, multiple Building Area (BA) segmentation and building segmentation datasets have been proposed[121-125]. BA segmentation annotates large urban BAs in large-area, medium-resolution SAR images, for instance, the GF-3 FSII SAR data used in Ref. [122]. In contrast, building segmentation focuses on building instances in higher-resolution SAR images[121,123-125]. Because SAR images are difficult to interpret visually, most building segmentation datasets are annotated based on optical images or auxiliary data such as street maps (e.g., OpenStreetMap, OSM). Fig. 10(a) shows two annotation cases from the building segmentation dataset proposed by Xia et al.[121], together with the predictions of a DL model. Notably, skyscrapers are clearly visible in the optical image but cannot be recognized in the SAR image because of complex scattering. The ground truth provided by OSM reflects the building footprint projected on the ground, so the DL model has difficulty learning a direct semantic mapping from the SAR image to the ground truth. In the other case, the optical image shows that no building is present in the upper-right corner; because of layover in the SAR image, however, the DNN identifies building backscattering there even though no building is actually located in that corner. Strictly speaking, what an SAR image displays is not the building itself but the backscattering of electromagnetic waves from the target and its surrounding environment. The DL model therefore needs physical knowledge to bridge the gap between the backscattering representation and the target semantics.
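The footprint mismatch described above follows from simple imaging geometry. For a vertical wall of height $h$ seen at incidence angle $\theta$ (measured from vertical, flat terrain assumed), the top is $h\cos\theta$ closer in slant range than the base, which appears as a layover displacement of $h\cot\theta$ toward the sensor in ground range, while the ground behind the wall is shadowed over $h\tan\theta$. A minimal sketch with a hypothetical function name:

```python
import math

def layover_and_shadow(height_m, incidence_deg):
    """Layover and shadow extents (m) for a vertical wall on flat ground.

    Returns (layover in slant range, layover in ground range, shadow in ground range).
    """
    th = math.radians(incidence_deg)
    layover_slant = height_m * math.cos(th)   # top is this much closer in slant range
    layover_ground = height_m / math.tan(th)  # same shift projected to ground range
    shadow_ground = height_m * math.tan(th)   # ground hidden behind the wall
    return layover_slant, layover_ground, shadow_ground
```

For a 30 m building at $\theta = 35°$, the roof returns are displaced about 42.8 m toward the sensor in ground range and the shadow extends about 21.0 m behind it, so backscatter can appear well outside the OSM footprint, and even where no building stands.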

The InSAR building segmentation dataset proposed by Chen et al.[123] annotates the scattering characteristics of buildings with either four categories (shadow, layover, single scattering, and secondary scattering) or two categories (layover and shadow), as shown in Fig. 10(b). CVCMFF-Net, a complex-valued convolutional segmentation network proposed in Ref. [123], takes the master and slave InSAR images as input to establish a mapping from complex SAR images to the basic scattering characteristics of buildings. The segmentation results have explainable physical meaning, which can help in analyzing the semantics, location, height, and other information of buildings. Qiu et al.[125] recently proposed an SAR microwave vision 3D imaging dataset based on single-view complex image data, which provides detailed semantic annotations of building instances and retains layover number information. However, the paper also shows that semantic segmentation models based only on visual information (such as Mask R-CNN) achieve low accuracy on it[125], indicating that building segmentation on this dataset is difficult. More PXDL research based on this dataset is anticipated in the near future.

6. Future Outlooks

In general, PXDL for SAR image interpretation is still in its infancy. Most advanced research focuses on SAR automatic target recognition, owing to the early start of DL development in this direction and the support of MSTAR and other public datasets that contain complex data, imaging parameters, and other multidimensional information. Given the problems and challenges that remain in the field, future research can be conducted in the following directions:

(1) SAR image interpretation dataset

To promote the further development of PXDL methods that are deeply integrated with the physical characteristics of SAR, it is of great significance to construct SAR image interpretation datasets with richer information than amplitude images alone. Such datasets include SAR target detection and recognition datasets with complex data and imaging information, SAR image classification or segmentation datasets with physical scattering characteristics, and SAR echo datasets.

(2) Physical constraint in data-driven learning

In applications such as SAR target simulation and super-resolution reconstruction, it is inappropriate to learn, simulate, or replace physical processes with DNNs without considering the physical knowledge of SAR. In the future, physical constraints in data-driven learning should be strengthened: network training should be constrained by penalizing results that violate physical laws so that physically consistent predictions are obtained. DNNs can also be used to model the errors of physical models to improve existing physics and theory[29].
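Penalizing physically inconsistent outputs can be written as extra terms in the training loss. The sketch below illustrates this for intensity super-resolution under two stated assumptions: the degradation from high to low resolution is modeled as non-overlapping $k\times k$ averaging (a stand-in for the true SAR imaging model), and intensities must be non-negative. The function names and weights `lam_cons`, `lam_pos` are hypothetical.

```python
import numpy as np

def block_average(img, k):
    """Assumed degradation model: non-overlapping k x k mean pooling."""
    H, W = img.shape
    cropped = img[:H - H % k, :W - W % k]
    return cropped.reshape(H // k, k, W // k, k).mean(axis=(1, 3))

def physics_penalized_loss(pred_hr, target_hr, obs_lr, k=2, lam_cons=1.0, lam_pos=1.0):
    """Data term plus penalties for violating the assumed physics."""
    data = np.mean((pred_hr - target_hr) ** 2)
    # Degrading the prediction should reproduce the low-resolution observation
    consistency = np.mean((block_average(pred_hr, k) - obs_lr) ** 2)
    # Backscattered intensity cannot be negative
    negativity = np.mean(np.clip(-pred_hr, 0.0, None) ** 2)
    return data + lam_cons * consistency + lam_pos * negativity
```

A prediction that matches the reference exactly incurs no penalty, while one that drifts away from the observation or produces negative intensities is charged beyond the plain data term.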

(3) Physics-guided learning for SAR

At present, many studies have embedded physics knowledge into neural network models from the perspective of feature fusion, making initial attempts at hybrid modeling. To break through the application bottleneck caused by scarce labeled samples, PGNNs that promote unsupervised learning need to be developed. PGL aims to make full use of physical models and domain knowledge, as well as large amounts of unlabeled SAR image data, with the objective function or model structure motivated by physical laws. It can automatically mine feature representations with strong generalization and physical perception ability. Furthermore, PGL should be combined with methods such as few-shot learning, zero-shot learning, and meta-learning to form an SAR-specific small-sample DL system.

(4) Interdisciplinary research with XAI

Not only should a DL-based SAR image interpretation method achieve faster speed, higher accuracy, and stronger performance across a variety of tasks, but it must also meet practical requirements such as more transparent algorithms, more trustworthy results, and greater robustness to disturbances. Most of the studies discussed in this paper concentrate on how to integrate DL with SAR physical models, mainly focusing on the performance gains from incorporating physics knowledge, with little discussion of interpretability. The information and priors provided by theoretical physical models are expected to balance algorithmic transparency and intelligence, thus enabling human–computer interaction. A crucial step is to conduct interdisciplinary research that combines XAI theories and techniques to advance PXDL approaches for SAR interpretation.

(5) Combination with uncertainty quantification

Some practical SAR interpretation scenarios require reliable predictions. Although DL methods have achieved high accuracy in some SAR image interpretation tasks, users still cannot fully trust the predictions of deep models. For example, the physical characteristics of SAR targets are sensitive to imaging parameters, and small perturbations may lead to dramatic changes in the results. When training samples are scarce, users need to perceive the uncertainty of the model's predictions so that they can use confident results as a reference or discard overly suspicious ones. Combining uncertainty quantification with PXDL can reduce the prediction uncertainty caused by data-driven learning bias by taking advantage of the robustness of physical models.
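A generic way to expose predictive uncertainty is to average the class probabilities from several stochastic forward passes (for example, Monte Carlo dropout) or ensemble members, compute the entropy of the mean, and flag high-entropy predictions for review. The sketch below illustrates that recipe under those assumptions; it is not a PXDL method from the surveyed work, and the threshold `max_entropy` and the `-1` rejection label are hypothetical choices.

```python
import numpy as np

def predictive_entropy(probs):
    """probs: (M, N, K) class probabilities from M passes/members, N samples, K classes."""
    p_mean = probs.mean(axis=0)                                         # (N, K)
    return -np.sum(p_mean * np.log(np.clip(p_mean, 1e-12, None)), axis=-1)

def predict_with_rejection(probs, max_entropy):
    """Argmax label per sample, or -1 when the entropy exceeds max_entropy."""
    p_mean = probs.mean(axis=0)
    labels = p_mean.argmax(axis=-1)
    ent = predictive_entropy(probs)
    labels = np.where(ent <= max_entropy, labels, -1)  # -1 marks "needs review"
    return labels, ent
```

When the members agree, the entropy stays low and the label is kept; when they disagree (for example, one favors class 0 and another class 1), the mean distribution flattens, the entropy rises, and the prediction is withheld.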

7. Conclusions

In the past few years, DL-based SAR image interpretation technology has continually set new performance records across a variety of tasks and produced remarkable results compared with model-based methods. The post-DL era, which combines knowledge-driven and data-driven approaches, has arrived. Interpretable SAR physical mechanisms and learnable DNNs are complementary and offer extensive development opportunities. This paper provides an overview of the fundamental concepts of physics-based machine learning and summarizes the challenges and feasibility of developing PXDL methods in the SAR image interpretation field. We review recent cutting-edge research that combines DL with physical models for understanding SAR signals and physical characteristics, as well as for semantic understanding and applications, and look ahead to future developments. Research in this field is not yet mature, and it is anticipated that more professionals and academics from diverse domains will participate, learn from one another, and conduct more in-depth studies on PXDL algorithms for SAR in the future.


References