
Citation: SUN Xian, WANG Zhirui, SUN Yuanrui, et al. AIR-SARShip-1.0: High-resolution SAR ship detection dataset[J]. Journal of Radars, 2019, 8(6): 852–862. doi: 10.12000/JR19097

AIR-SARShip-1.0: High-resolution SAR Ship Detection Dataset (in English)

DOI: 10.12000/JR19097 CSTR: 32380.14.JR19097


Abstract

In recent years, deep-learning technology has been widely used. In Synthetic Aperture Radar (SAR) ship detection research, however, data are difficult to acquire and the available sample sets are small, which makes it hard to train deep-learning network models. This paper provides a high-resolution SAR ship detection dataset built from large-scale images. The dataset comprises 31 Gaofen-3 satellite SAR images covering harbors, islands, reefs, and sea surfaces under different conditions, with backgrounds that range from near-shore to open-sea scenes. We conducted experiments using both traditional detection algorithms and deep-learning algorithms and found that the densely connected end-to-end neural network achieved the highest average precision, 88.1%. Based on these experiments and the accompanying performance analysis, we provide benchmarks as a basis for further research on SAR ship detection using this dataset.


Keywords:

- SAR ship detection,

- Public dataset,

- Deep learning
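The abstract reports detection quality as average precision (AP). As a rough illustration of what that metric measures, the sketch below computes AP for one image and one class from scored bounding boxes. The IoU threshold of 0.5, the all-point interpolation, the greedy matching rule, and the names `iou` and `average_precision` are assumptions made for this example; the page itself does not state the evaluation protocol the paper used.

```python
from typing import List, Tuple

# A box is (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(
    detections: List[Tuple[float, Box]],  # (confidence score, box)
    truths: List[Box],
    iou_thr: float = 0.5,  # assumed threshold, not taken from the paper
) -> float:
    """All-point interpolated AP for a single image and a single class."""
    if not truths:
        return 0.0
    matched = [False] * len(truths)
    tp_flags = []
    # Visit detections from most to least confident; each one is matched
    # to the best-overlapping ground-truth box that is still unmatched.
    for _score, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, truth in enumerate(truths):
            if matched[j]:
                continue
            overlap = iou(box, truth)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        is_tp = best_j >= 0 and best_iou >= iou_thr
        if is_tp:
            matched[best_j] = True
        tp_flags.append(is_tp)
    # Running precision and recall after each detection.
    precisions, recalls, tp = [], [], 0
    for k, flag in enumerate(tp_flags, start=1):
        tp += int(flag)
        precisions.append(tp / k)
        recalls.append(tp / len(truths))
    # Make precision non-increasing from left to right (the PR envelope).
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Area under the envelope, summed over recall increments.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


if __name__ == "__main__":
    truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
    detections = [
        (0.9, (0, 0, 10, 10)),    # correct
        (0.8, (50, 50, 60, 60)),  # false alarm
        (0.7, (20, 20, 30, 30)),  # correct
    ]
    print(round(average_precision(detections, truths), 4))
```

A benchmark evaluation would pool detections across all test images before ranking them by score; the single-image form here only keeps the example short.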



Proportional views

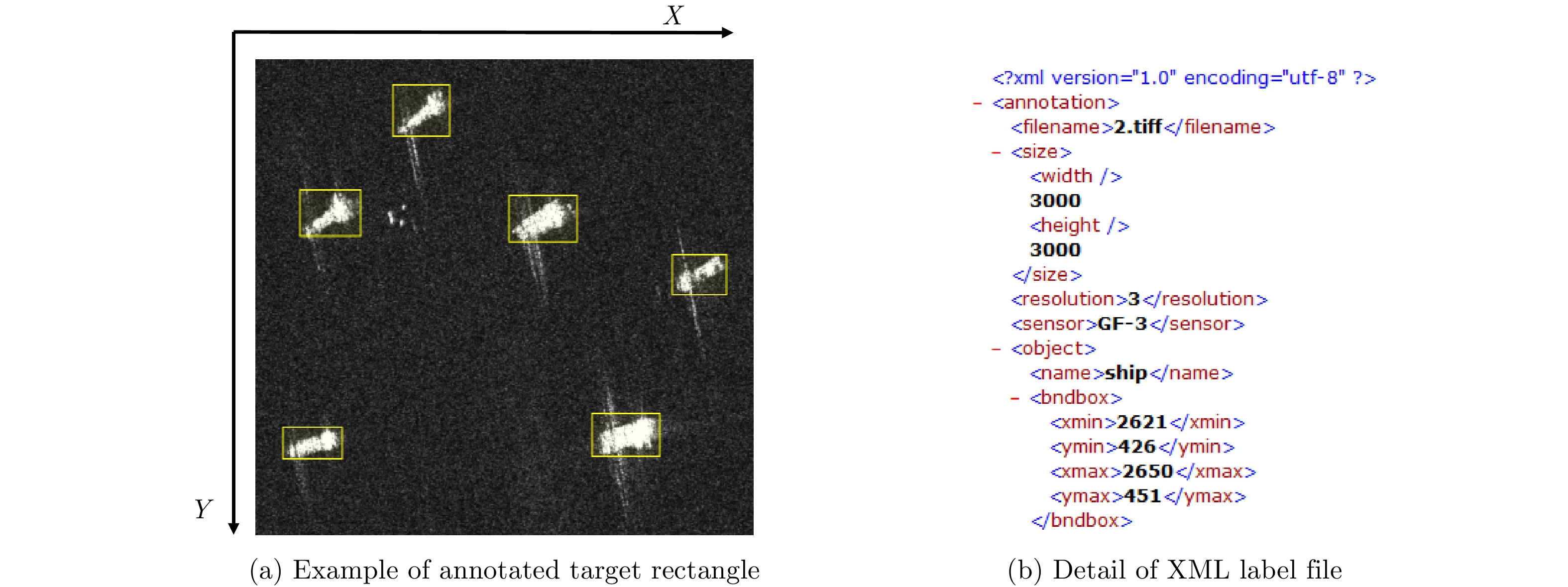

- Figure 1. The annotated example in the dataset

- Figure 2. The example scenes of AIR-SARShip-1.0 dataset

- Figure 3. The area distribution of ship rectangle in the dataset

- Figure 4. Imaging examples of the same area at different angles

- Figure 5. The examples of rotated images

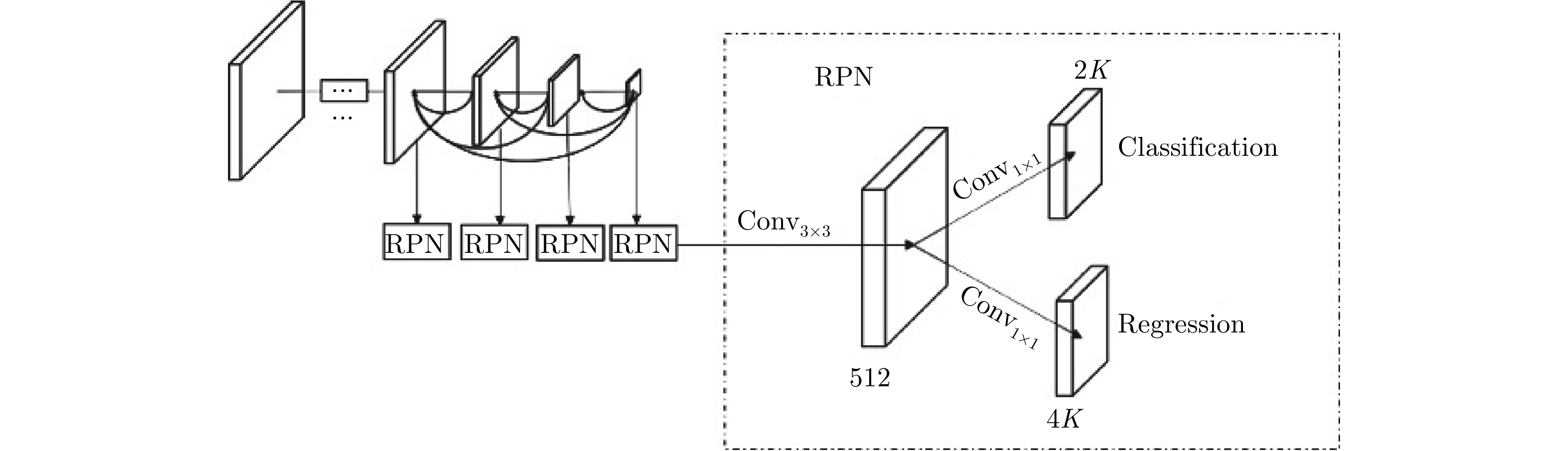

- Figure 6. The main structure of DCENN network

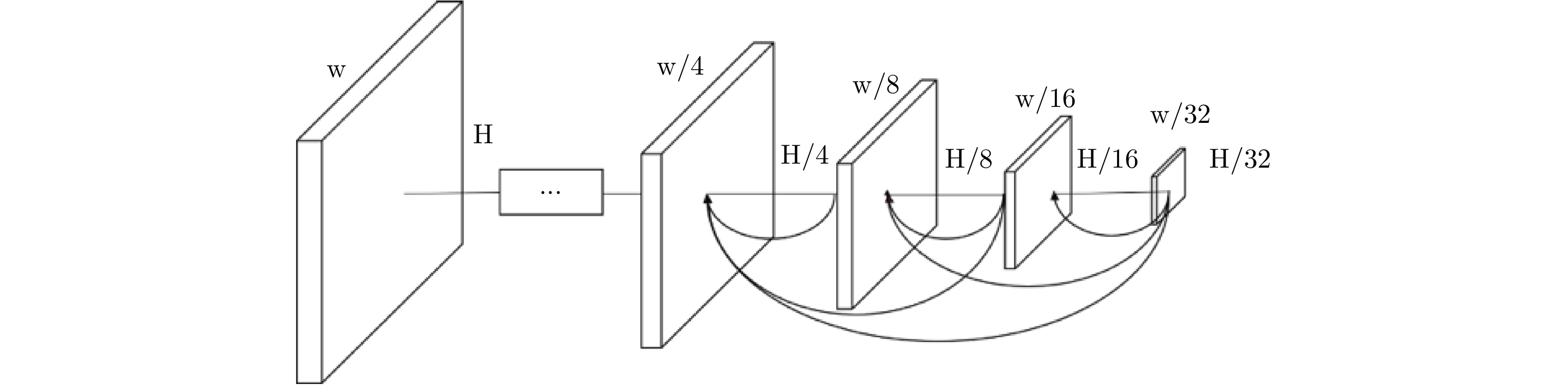

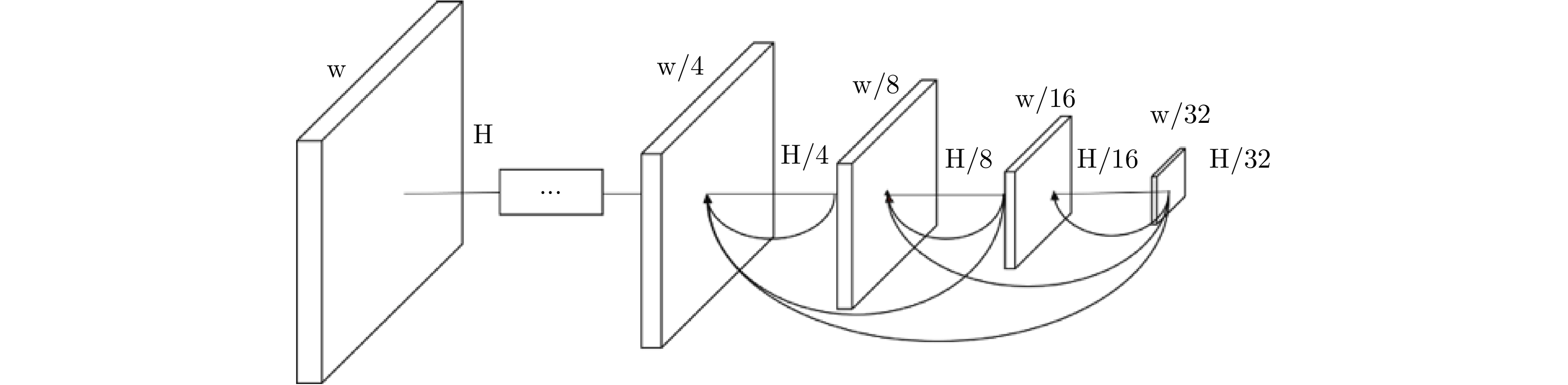

- Figure 7. The fusion feature map based on dense connection

- Figure 8. The detection example of SAR ship based on Faster-RCNN

- Figure 1. Release address of AIR-SARShip-1.0 dataset
